In the world of artificial intelligence, the progress from one generation of hardware to the next can redefine what’s possible. The transition from Nvidia’s Volta architecture to the Ampere architecture marked one such leap. The Nvidia V100, built on Volta, was the undisputed king of the data center, powering a revolution in deep learning. Its successor, the Nvidia A100, built on Ampere, didn’t just iterate; it fundamentally changed the game for AI training and high-performance computing (HPC).

For organizations and researchers, understanding the practical differences between the venerable V100 and the powerful A100 (specifically the 40GB model) is crucial for making strategic hardware decisions. This article offers a detailed comparison, exploring the architectural advancements, real-world performance gains, cost considerations, and how to choose the right GPU for your AI tasks.

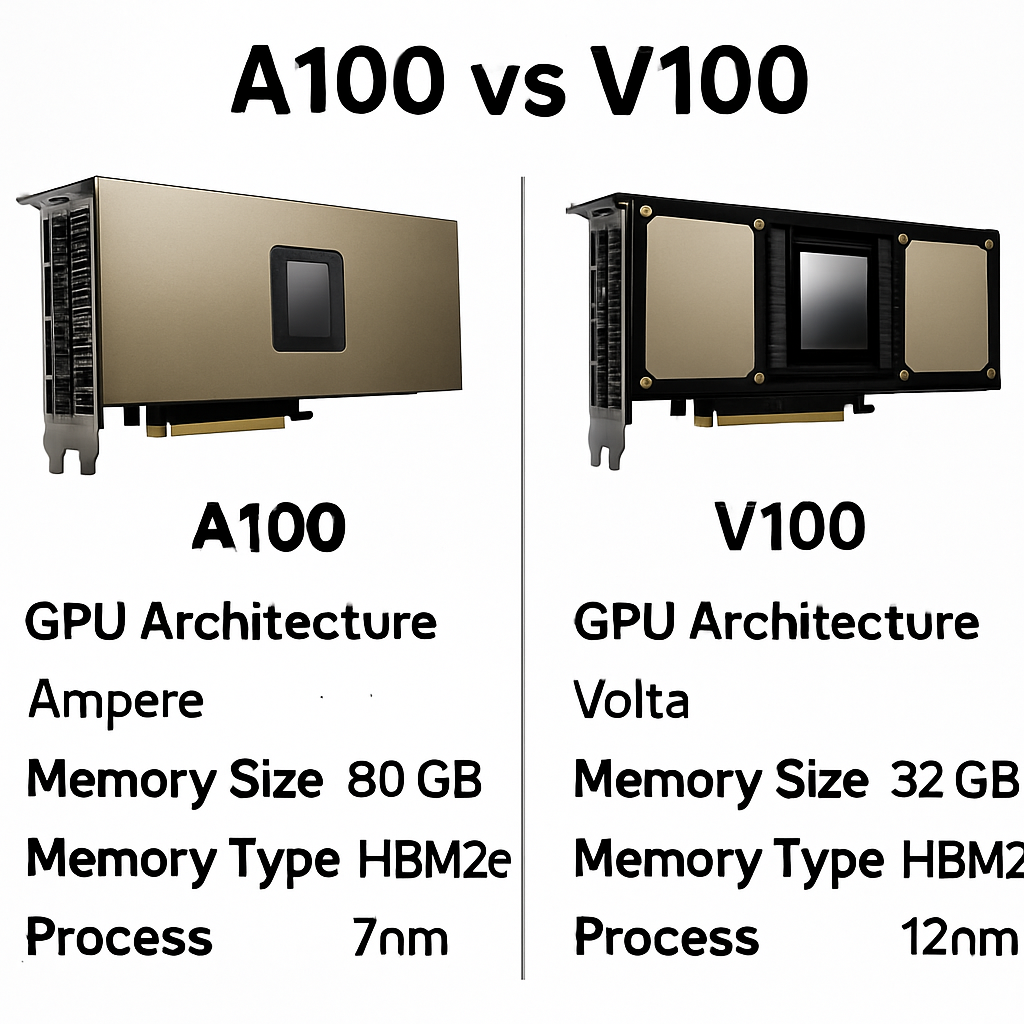

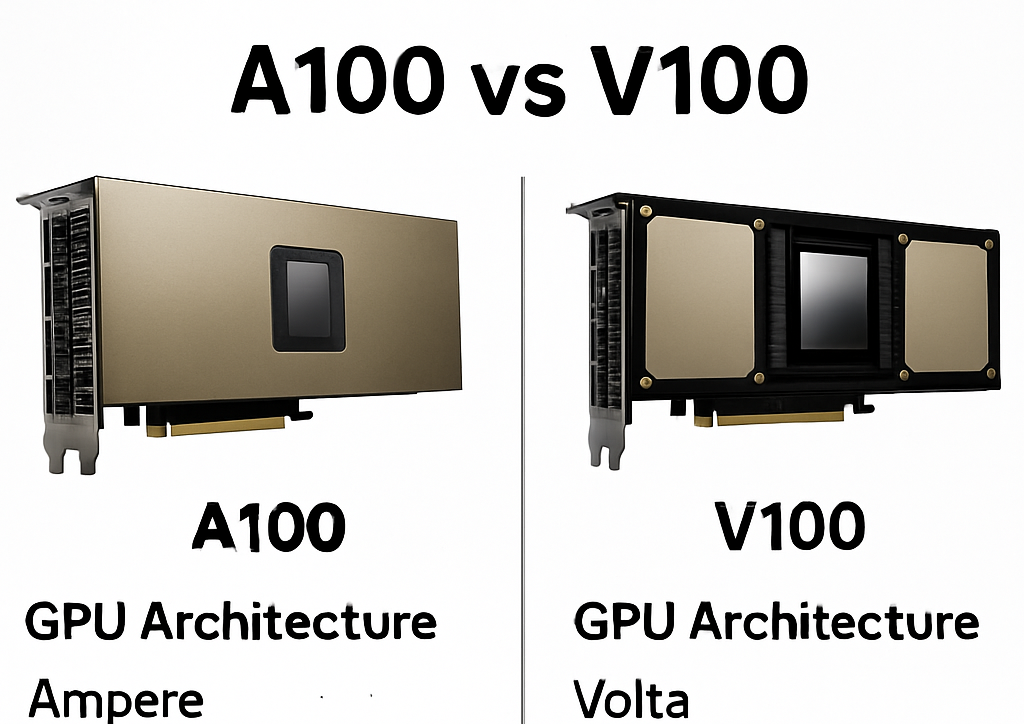

The Contenders: V100 and A100 at a Glance

The Nvidia V100 Tensor Core GPU, launched in 2017, was a trailblazer. It introduced Tensor Cores to the world, dramatically accelerating deep learning training and becoming the foundational infrastructure for the AI boom.

The Nvidia A100 Tensor Core GPU, which debuted in 2020, was Nvidia’s next major step. The Ampere architecture brought significant improvements across the board, designed to handle larger models and more complex datasets with greater efficiency.

| Feature | Nvidia V100 (SXM2) | Nvidia A100 (40GB SXM4) |

|---|---|---|

| Architecture | Volta | Ampere |

| Release Year | 2017 | 2020 |

| GPU Memory | 16GB/32GB HBM2 | 40GB HBM2 |

| Memory Bandwidth | 900 GB/s | 1.6 TB/s |

| Tensor Cores | 1st Generation | 3rd Generation |

| FP32 Performance | 15.7 TFLOPS | 19.5 TFLOPS |

| Tensor Performance (FP16) | 125 TFLOPS | 312 TFLOPS |

| NVLink | 2nd Generation (300 GB/s) | 3rd Generation (600 GB/s) |

| Multi-Instance GPU (MIG) | No | Yes (up to 7 instances) |

Architectural Deep Dive: From Volta to Ampere

The leap from the V100’s Volta architecture to the A100’s Ampere architecture brought several transformative changes crucial for AI.

Third-Generation Tensor Cores & TF32

The A100 features third-generation Tensor Cores, which are vastly more powerful and flexible than the first-generation cores in the V100. The most significant innovation was the introduction of the TensorFloat-32 (TF32) data format. TF32 provides a middle ground between the precision of FP32 and the speed of FP16. It allows the A100 to achieve up to 20x the performance of the V100 for FP32 workloads with zero code changes, a massive boost for traditional HPC and AI training tasks that rely on this precision.

Sparsity Acceleration

Modern neural networks often have many weights that are close to zero. The A100 can exploit this “sparsity” to double the throughput of Tensor Core operations. It can ignore the unnecessary zero values in the calculations, effectively halving the work required. The V100 does not have this hardware-level sparsity acceleration.

Multi-Instance GPU (MIG)

Perhaps one of the most impactful features for cloud and enterprise environments is Multi-Instance GPU (MIG). The A100 can be partitioned into up to seven independent GPU instances, each with its own dedicated memory, cache, and compute cores. This allows for much finer-grained resource allocation and can dramatically improve GPU utilization, as multiple users and jobs can run securely and in parallel on a single A100. The V100 lacks this capability, operating as a single, monolithic GPU.

Increased Memory and Bandwidth

The A100 40GB model offers more than double the memory of the standard 16GB V100 and a significant bump over the 32GB version. More importantly, its memory bandwidth is nearly 70% higher (1.6 TB/s vs. 900 GB/s). This allows the A100 to process much larger models and datasets without being bottlenecked by data access speeds.

Performance in the Real World: AI Benchmarks

These architectural advancements result in dramatic performance improvements for AI workloads.

AI Training

For AI training, the A100 is a powerhouse. Across various benchmarks, the A100 consistently delivers 2.5x to 5x the performance of the V100.

For example, in training the popular BERT model for natural language processing, a cluster of A100s can complete the task in a fraction of the time it takes a similarly sized cluster of V100s. The combination of faster Tensor Cores, TF32, and higher memory bandwidth allows for faster iteration and the ability to train more complex models. Major AI research labs and cloud providers quickly adopted the A100 upon its release to accelerate projects like the development of large language models, which were often severely limited by the training times on V100 hardware.

AI Inference

The performance gains for inference are even more stark. Nvidia’s benchmarks show the A100 outperforming the V100 by up to 7x on inference tasks. The A100’s ability to handle sparse models and its higher throughput make it ideal for deploying AI at scale. For applications like real-time translation or large-scale image recognition, this means a single A100 can serve significantly more users than a V100, directly impacting operational efficiency.

Cost vs. Performance: Making the Financial Case

While an A100 GPU is more expensive than a V100 upfront, its superior performance often leads to a lower Total Cost of Ownership (TCO).

Cloud and On-Premise Pricing

On cloud platforms, an A100 instance is typically more expensive per hour than a V100 instance. However, if the A100 can complete a training job 3-4 times faster, the total compute cost for that job will be significantly lower.

For on-premise deployments, the calculation is more complex but often yields the same conclusion. While the initial capital expenditure for A100s is higher, their superior performance means fewer GPUs are needed to achieve the same level of throughput. This leads to savings in server costs, power, cooling, and data center space. The MIG feature further enhances the value proposition, allowing for the consolidation of diverse workloads onto fewer GPUs, maximizing the return on investment.

How to Make the Choice: A Practical Guide

The decision between the A100 and V100 depends on your specific circumstances.

When to Choose the A100:

- New Deployments and Upgrades: If you are building a new AI infrastructure or upgrading an existing one, the A100 is the clear choice for its superior performance, efficiency, and features like MIG.

- Large-Scale AI Training: For training large, complex models, the A100’s speed will dramatically reduce training times and costs.

- High-Volume Inference: If you are deploying AI services at scale, the A100’s inference throughput provides a significant advantage in both performance and cost per inference.

- Multi-Tenant Environments: The MIG feature makes the A100 the ideal choice for cloud providers or enterprises that need to serve multiple users or departments.

When the V100 Might Still Be a Consideration:

- Existing Infrastructure: If you have an existing V100 deployment and your workloads are meeting performance and cost targets, an immediate upgrade might not be necessary.

- Strict Budget Constraints: For projects with extremely tight budgets where “good enough” performance is acceptable, sourcing V100s on the secondary market or from cloud providers at a lower cost could be a viable option.

- Smaller-Scale Workloads: For smaller models or less demanding tasks where the full power of the A100 might be underutilized, the V100 can still be a capable performer.

Conclusion

The Nvidia A100 represents a monumental leap forward from the V100. Its Ampere architecture delivered a step-change in performance and introduced critical features like TF32 and MIG that have redefined efficiency in the data center. While the V100 was a legendary GPU that paved the way for the modern AI era, the A100’s raw power and advanced feature set make it the superior choice for nearly all new AI and HPC deployments. For organizations serious about AI, investing in the A100 platform yields not just an incremental speedup but a transformational capability to build and deploy the next generation of artificial intelligence.