Introduction: The Server GPU Software Stack

In the high-stakes world of artificial intelligence, high-performance computing, and advanced visualization, server-grade GPUs are the undisputed powerhouses. But raw silicon alone is useless. The true magic – the ability to train massive models, render complex scenes in real-time, or simulate intricate physical phenomena – lies in the sophisticated layers of software that sit between the hardware and the end-user application. This intricate ecosystem, the Server GPU Software Stack, is the unsung hero enabling modern computational breakthroughs. Let’s break down its essential components: Drivers, Frameworks, Containers, and Orchestration.

1. The Foundation: GPU Drivers & Low-Level APIs

Think of drivers as the essential translators. They allow the operating system and higher-level software to communicate directly with the complex GPU hardware. For server environments, specialized drivers are critical:

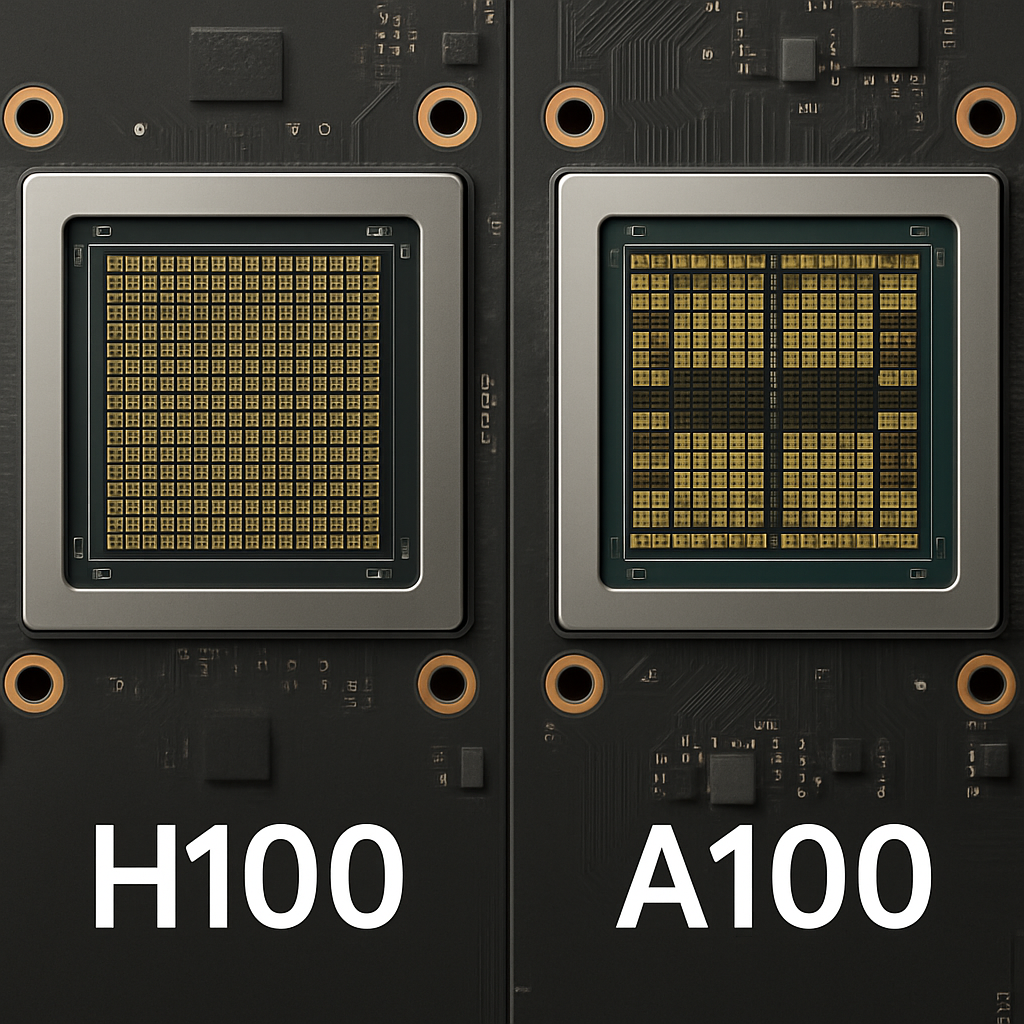

- NVIDIA vGPU & CUDA: The dominant force. NVIDIA vGPU (Virtual GPU) technology is pivotal in virtualized and cloud environments. It allows physical GPUs (like A100, H100, L40S) to be securely partitioned and shared among multiple virtual machines or users, either by time-slicing or, on MIG-capable GPUs such as A100 and H100, by backing vGPUs with Multi-Instance GPU partitions. Underpinning almost all NVIDIA acceleration is CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model that gives developers direct, low-level access to the GPU’s virtual instruction set and parallel computational elements. CUDA is the bedrock of most high-performance computing on NVIDIA GPUs.

- AMD ROCm: AMD’s open-source answer to CUDA. The ROCm platform (originally “Radeon Open Compute”) provides the drivers, tools, libraries, and compiler support needed to use AMD Instinct GPUs (MI300X, etc.) for HPC and AI workloads. Its HIP programming interface closely mirrors CUDA, so existing CUDA code can often be ported with modest effort, and AMD’s HIPIFY tools automate much of the translation. Its open nature fosters flexibility and reduces vendor lock-in.

- Intel oneAPI: Intel’s unified programming model for heterogeneous architectures (CPUs, GPUs, FPGAs, etc.). For Intel GPUs such as the Data Center GPU Max Series, oneAPI provides open, standards-based libraries (oneDNN, oneMKL) and Data Parallel C++ (DPC++), Intel’s SYCL-based language, offering a cross-architecture approach to GPU acceleration. Intel’s oneVPL handles video processing.
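CUDA (and AMD’s HIP, which mirrors it) expresses parallel work as a grid of thread blocks, and each thread derives the element it owns from its block and thread indices. The Python below is only a sequential emulation of that indexing scheme, written for illustration: `vector_add_kernel` and `launch` are hypothetical helpers, not part of any GPU API, and a real GPU runs the threads concurrently.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body of a hypothetical element-wise add 'kernel'."""
    # Same arithmetic as CUDA's blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    # Bounds check: the last block can contain more threads than remaining elements
    if i < len(out):
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Run every (block, thread) pair one after another; a GPU runs them in parallel."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
a = list(range(n))
b = [10 * x for x in range(n)]
out = [0] * n

block_dim = 4                                # threads per block
grid_dim = (n + block_dim - 1) // block_dim  # ceil(10 / 4) = 3 blocks, 12 threads
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)  # [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

The ceiling division for `grid_dim` combined with the bounds check inside the kernel is the usual pattern when the problem size is not a multiple of the block size.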

Why this layer matters: Without robust, optimized drivers and low-level APIs like CUDA, ROCm, or oneAPI, the immense computational power of server GPUs remains inaccessible. They handle memory management, task scheduling, and direct hardware control.
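One everyday way to see the driver layer at work is to ask it about the installed GPUs. `nvidia-smi` ships with the NVIDIA driver and supports machine-readable queries; the sketch below builds such a query and parses its CSV output. The sample text is hypothetical, made up for illustration so the parser can run on machines without a GPU, and the helper names are likewise illustrative.

```python
import csv
import io
import subprocess

# Fields to request from the driver; `nvidia-smi --help-query-gpu` lists all of them.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,driver_version,memory.total,memory.free",
    "--format=csv,noheader,nounits",
]

# Hypothetical output for a two-GPU server (illustration only, not real measurements).
SAMPLE_OUTPUT = """0, NVIDIA A100-SXM4-40GB, 535.104.05, 40960, 39936
1, NVIDIA A100-SXM4-40GB, 535.104.05, 40960, 1960
"""


def parse_gpu_query(text):
    """Turn nvidia-smi CSV rows into dicts; memory values are in MiB (nounits)."""
    gpus = []
    for row in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not row:
            continue
        index, name, driver, total, free = row
        gpus.append({
            "index": int(index),
            "name": name,
            "driver_version": driver,
            "memory_total_mib": int(total),
            "memory_free_mib": int(free),
        })
    return gpus


def query_local_gpus():
    """Run the real query; requires the NVIDIA driver and nvidia-smi on PATH."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_query(result.stdout)


if __name__ == "__main__":
    for gpu in parse_gpu_query(SAMPLE_OUTPUT):
        print(gpu["index"], gpu["name"], gpu["memory_free_mib"], "MiB free")
```

On a real GPU server, `query_local_gpus()` replaces the sample string with live data from the driver.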

2. The Productivity Boosters: AI/Compute Frameworks

Building complex applications directly on CUDA or ROCm is challenging. High-level frameworks abstract this complexity, providing pre-built functions, neural network layers, and optimized operations:

- PyTorch: Hugely popular in research and increasingly in production due to its dynamic computation graph (eager execution), Pythonic nature, and extensive ecosystem (TorchServe, TorchScript). Favored for its flexibility and debugging ease.

- TensorFlow: A mature, production-ready framework known for its scalability and robust deployment tools (TensorFlow Serving, TensorFlow Lite). TensorFlow 2.x executes eagerly by default and compiles performance-critical code into static graphs via tf.function. Widely used in large-scale enterprise deployments.

- CUDA-X: Not a single framework, but a comprehensive collection of libraries built on CUDA by NVIDIA. This includes:

  - cuDNN: Deep Neural Network library (used by PyTorch/TF).

  - cuBLAS: Basic Linear Algebra Subprograms.

  - NCCL: Optimized collective communication for multi-GPU/multi-node.

  - cuFFT: Fast Fourier Transforms.

  - TensorRT: High-performance deep learning inference optimizer and runtime.

- OpenCL (Open Computing Language): An open, cross-vendor standard for parallel programming across heterogeneous platforms (CPUs, GPUs, FPGAs). While less dominant in cutting-edge AI than CUDA or ROCm, it remains relevant for portable code and for accelerators outside the NVIDIA and AMD ecosystems.
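NCCL’s collectives are easier to picture with a toy model. The sketch below simulates a sum all-reduce with the ring algorithm (one of the algorithms NCCL can choose; it also has tree-based variants) across N simulated ranks in plain Python. A reduce-scatter phase leaves each rank holding one fully summed chunk, then an all-gather phase circulates those chunks until every rank has the full result. It models data movement only; real NCCL pipelines these transfers over NVLink, PCIe, or the network, and `ring_allreduce` is a hypothetical name.

```python
def ring_allreduce(buffers):
    """Sum-all-reduce equal-length lists held by len(buffers) simulated ranks.

    Returns a new list of per-rank buffers; after the call every rank holds
    the element-wise sum of all input buffers.
    """
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all ranks must hold buffers of the same length")

    # Split each buffer into n contiguous chunks (sizes differ by at most one element).
    bounds = [(c * length // n, (c + 1) * length // n) for c in range(n)]
    data = [list(b) for b in buffers]

    def ring_step(chunk_for_rank, combine):
        # Every rank r sends one chunk to its right neighbour (r + 1) % n.
        # Payloads are copied first so all sends in a step see the same state.
        messages = []
        for r in range(n):
            c = chunk_for_rank(r)
            lo, hi = bounds[c]
            messages.append(((r + 1) % n, c, data[r][lo:hi]))
        for dst, c, payload in messages:
            lo, hi = bounds[c]
            data[dst][lo:hi] = [combine(old, new)
                                for old, new in zip(data[dst][lo:hi], payload)]

    # Phase 1, reduce-scatter: after n - 1 steps rank r owns the full sum of chunk (r + 1) % n.
    for s in range(n - 1):
        ring_step(lambda r: (r - s) % n, lambda old, new: old + new)

    # Phase 2, all-gather: pass the finished chunks around the ring, overwriting stale copies.
    for s in range(n - 1):
        ring_step(lambda r: (r + 1 - s) % n, lambda old, new: new)

    return data


ranks = [[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]
print(ring_allreduce(ranks))  # every rank ends with [111, 222, 333, 444]
```

Each rank sends 2(N − 1) chunks, roughly twice its buffer size no matter how many ranks join, which is why the ring scheme is bandwidth-efficient; its latency, however, grows with N.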

Why this layer matters: Frameworks dramatically accelerate development. They provide the building blocks for AI models (training & inference), scientific simulations, and data analytics, leveraging the underlying drivers and APIs for peak GPU performance without requiring developers to write and tune GPU kernels by hand.
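As a small illustration of that abstraction, the PyTorch sketch below runs the same matrix multiply on whichever device is available. It assumes PyTorch is installed and returns `(None, None)` otherwise so the script still runs; `run_matmul` is a hypothetical name. On NVIDIA GPUs the `@` operator is typically dispatched to cuBLAS, and ROCm builds of PyTorch reuse the same `torch.cuda` interface for AMD GPUs.

```python
import importlib.util


def run_matmul(n=4):
    """Multiply two n x n all-ones matrices on the best available device.

    Returns (device_type, sum_of_result); every entry of the product equals n,
    so the sum is n ** 3.
    """
    if importlib.util.find_spec("torch") is None:
        return None, None  # PyTorch not installed; nothing to demonstrate
    import torch

    # The only device-specific line: the framework handles kernels and memory.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    a = torch.ones(n, n, device=device)
    b = torch.ones(n, n, device=device)
    c = a @ b  # GPU BLAS library on CUDA devices, a CPU implementation otherwise
    return device.type, c.sum().item()


if __name__ == "__main__":
    print(run_matmul(4))
```

Nothing in the function launches kernels or copies memory explicitly; the framework and the libraries beneath it take care of both.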

3. Taming Complexity: Containerization

Deploying and managing the intricate dependencies of GPU software stacks (specific driver versions, CUDA/ROCm, framework versions, Python packages) across diverse environments is a nightmare. Containerization solves this:

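- Docker Images: The standard unit of containerization. Images encapsulate an application and all its dependencies (OS libraries, frameworks, and user-space libraries such as the CUDA runtime). The kernel-mode GPU driver stays on the host; tools such as the NVIDIA Container Toolkit expose the host’s GPUs and driver libraries to containers at runtime. This ensures consistency from a developer’s laptop to a testing server to a massive production cluster. “It works on my machine” becomes “It works on any host with a compatible driver.”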

- NVIDIA NGC (NVIDIA GPU Cloud): A curated registry of optimized, certified, and secure containers for AI, HPC, and visualization. NGC provides pre-built containers for PyTorch, TensorFlow, RAPIDS, Clara, and many more, all tuned for NVIDIA GPUs and updated regularly. This is a massive productivity boost, eliminating the pain of building complex GPU environments from scratch.

- Kubernetes Operators: Managing stateful, complex applications like GPU-accelerated databases or AI platforms on Kubernetes requires more than basic pods. Operators use custom resource definitions (CRDs) and controllers to automate the deployment, scaling, management, and lifecycle operations of these applications within a K8s cluster. GPU-specific operators handle complexities like device plugin configuration, topology awareness, and workload scheduling.
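In practice, running an NGC container on a GPU host usually comes down to one `docker run` invocation with the `--gpus` flag, which requires the NVIDIA Container Toolkit on the host. The helper below only assembles that command line. The image tag is an example of NGC’s `YY.MM-py3` naming and should be checked against the NGC catalog, and `docker_gpu_command` is a hypothetical helper name.

```python
import shlex
from typing import List, Optional

# Example NGC image reference; confirm the current tag in the NGC catalog.
EXAMPLE_IMAGE = "nvcr.io/nvidia/pytorch:24.01-py3"


def docker_gpu_command(image: str = EXAMPLE_IMAGE, gpus: str = "all",
                       command: Optional[List[str]] = None) -> List[str]:
    """Assemble (but do not run) a `docker run` argv that exposes host GPUs.

    `gpus` is passed to Docker's --gpus flag, e.g. "all" or a count such as "2".
    """
    argv = ["docker", "run", "--rm", "--gpus", gpus, image]
    if command:
        argv.extend(command)
    return argv


if __name__ == "__main__":
    # Sanity check: list the GPUs visible inside the container.
    print(shlex.join(docker_gpu_command(command=["nvidia-smi"])))
    # docker run --rm --gpus all nvcr.io/nvidia/pytorch:24.01-py3 nvidia-smi
```

Returning an argument list rather than a shell string lets the result go straight to `subprocess.run` without quoting problems.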

Why this layer matters: Containers provide isolation, reproducibility, and portability. NGC offers trusted, optimized building blocks. Kubernetes Operators enable scalable, resilient, and automated management of GPU workloads in modern cloud-native environments.
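Because the container carries the CUDA user-space libraries while the kernel-mode driver stays on the host, the host driver still has to be new enough for the CUDA version inside the image. The sketch below encodes the minimum Linux driver versions that NVIDIA’s CUDA Compatibility guide lists for minor version compatibility (CUDA 11.x: 450.80.02, CUDA 12.x: 525.60.13). Treat the table as an assumption to re-check against the current guide, since some newer CUDA features still need a newer driver; the function names are hypothetical.

```python
# Minimum Linux driver per CUDA major version under minor version compatibility,
# as listed in NVIDIA's CUDA Compatibility guide (re-check for newer releases).
MIN_LINUX_DRIVER = {
    11: (450, 80, 2),
    12: (525, 60, 13),
}


def parse_version(text):
    """'535.104.05' -> (535, 104, 5); tuples compare component by component."""
    return tuple(int(part) for part in text.strip().split("."))


def host_driver_supports(cuda_version, driver_version):
    """True if the host driver meets the minimum for the container's CUDA major version."""
    major = parse_version(cuda_version)[0]
    if major not in MIN_LINUX_DRIVER:
        raise ValueError(f"no driver requirement recorded for CUDA {major}.x")
    return parse_version(driver_version) >= MIN_LINUX_DRIVER[major]


print(host_driver_supports("12.2", "535.104.05"))  # True
print(host_driver_supports("12.2", "470.57.02"))   # False
```

Feeding this check the driver version reported by the host lets a deployment script fail fast, instead of failing later at CUDA initialization inside the container.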

4. Command & Control: Orchestration & Management Tools

Managing fleets of GPU servers, scheduling workloads efficiently, ensuring high availability, and providing user access requires enterprise-grade orchestration:

- Kubernetes (K8s): The de facto standard for container orchestration. Its extensibility allows integration with GPU resources via device plugins (e.g., NVIDIA k8s-device-plugin). K8s schedules GPU workloads across nodes, manages resources, and enables scaling. Essential for cloud-native AI/ML platforms.

- Red Hat OpenShift: An enterprise Kubernetes platform adding crucial features for production: enhanced security, streamlined developer workflows, integrated CI/CD, centralized policy management, and multi-cluster operations. Simplifies running complex, stateful GPU workloads at scale with enterprise support.

- VMware vSphere with Tanzu: Brings Kubernetes capabilities into the established VMware virtualization ecosystem. vSphere manages the underlying GPU servers (including NVIDIA vGPU profiles). Tanzu allows deploying and managing Kubernetes workloads alongside traditional VMs on the same infrastructure, leveraging vSphere’s robust resource management, high availability (HA), and disaster recovery (DR) features for GPU workloads.
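With a device plugin such as NVIDIA’s k8s-device-plugin installed, GPUs appear to Kubernetes as an extended resource (`nvidia.com/gpu`) that a container requests in its resource limits. The sketch below builds a minimal Pod manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The pod name, image, and `gpu_pod_manifest` helper are placeholders chosen for illustration.

```python
import json


def gpu_pod_manifest(name, image, gpu_count=1, command=None):
    """Minimal Pod that asks the scheduler for `gpu_count` whole GPUs."""
    container = {
        "name": name,
        "image": image,
        # Extended resources such as GPUs go in limits; requests default to the same value.
        "resources": {"limits": {"nvidia.com/gpu": gpu_count}},
    }
    if command:
        container["command"] = command
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"restartPolicy": "Never", "containers": [container]},
    }


if __name__ == "__main__":
    # Placeholder image; substitute an image from your registry or NGC.
    manifest = gpu_pod_manifest("gpu-smoke-test", "example.registry/cuda-app:latest",
                                gpu_count=1, command=["nvidia-smi"])
    print(json.dumps(manifest, indent=2))
```

Redirecting the output to a file and running `kubectl apply -f` on it submits the pod; the scheduler then only considers nodes that still have an unallocated GPU.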

Why this layer matters: Orchestration tools are the air traffic control system. They ensure GPU resources are utilized efficiently, workloads are placed optimally (considering GPU type/count, memory, topology), the system remains resilient, and users can deploy and manage their applications effectively, especially at scale.
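A toy version of that placement decision helps show what “optimal” can mean. The sketch below filters nodes by GPU type and free GPU count, then picks the node that would be left with the fewest idle GPUs (best-fit bin packing), which keeps large nodes free for large jobs. It is a simplified illustration, not how kube-scheduler or any vendor scheduler is implemented; real schedulers also weigh memory, topology, affinity, and priorities. `Node` and `place` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    gpu_type: str
    free_gpus: int


def place(request_gpus: int, gpu_type: str, nodes: List[Node]) -> Optional[str]:
    """Best-fit placement: return the chosen node's name, or None if nothing fits."""
    # Filter: right GPU model and enough unallocated GPUs.
    candidates = [n for n in nodes
                  if n.gpu_type == gpu_type and n.free_gpus >= request_gpus]
    if not candidates:
        return None
    # Score: fewest GPUs left over after placement; node name breaks ties deterministically.
    best = min(candidates, key=lambda n: (n.free_gpus - request_gpus, n.name))
    best.free_gpus -= request_gpus  # reserve the GPUs
    return best.name


cluster = [Node("h100-a", "H100", 8), Node("h100-b", "H100", 2), Node("a100-a", "A100", 4)]
print(place(2, "H100", cluster))  # h100-b (exact fit, leaves h100-a whole)
print(place(4, "H100", cluster))  # h100-a
print(place(1, "L40S", cluster))  # None (no node of that type)
```

A spreading strategy (choosing the node with the most free GPUs) would instead favour resilience and lower contention; which one fits depends on the workload mix.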

The Synergistic Stack: Powering Innovation

The true power emerges when these layers work seamlessly together:

- Drivers/APIs unlock the raw silicon.

- Frameworks provide accessible tools to harness that power for specific tasks.

- Containers package everything reliably for deployment anywhere.

- Orchestration manages the deployment and lifecycle efficiently across clusters.

Optimizing this stack is paramount. One example of a highly optimized, enterprise-ready pipeline: tuned NGC containers on Kubernetes clusters managed by OpenShift or vSphere with Tanzu, NVIDIA GPUs accessed via vGPU profiles, and PyTorch or TensorFlow models running on top of CUDA-X libraries.

Conclusion

The server GPU software stack is far more than just installing a driver. It’s a carefully integrated hierarchy of technologies, each playing a vital role in transforming powerful hardware into accessible, manageable, and scalable computational capability. Understanding the roles of drivers and low-level platforms such as vGPU, CUDA, ROCm, and oneAPI, frameworks like PyTorch and TensorFlow, containerization with Docker and NGC, and orchestration with Kubernetes, OpenShift, and vSphere is essential for anyone designing, deploying, or managing modern accelerated computing infrastructure. Investing in mastering this stack is investing in the engine room of tomorrow’s innovations.