Introduction

When you hear “GPU,” gaming graphics cards likely spring to mind, those flashy, powerful beasts designed to render lifelike worlds at blazing speeds. But there’s another, arguably more critical class of GPUs operating behind the scenes: server GPUs. These aren’t for fragging noobs; they’re the unsung engines driving the modern digital economy. Let’s dive into what server GPUs are, how they fundamentally differ from their consumer cousins, and why they’re indispensable.

Defining Server-Grade GPUs: Built for Business Criticality

At their core, both server GPUs and consumer GPUs (like NVIDIA GeForce RTX or AMD Radeon RX) are processors optimized for parallel computation. However, their design philosophies and target environments are worlds apart.

- Consumer GPUs: Prioritize gaming performance (high frame rates, high boost clocks), visual fidelity (ray-tracing cores), and consumer-friendly pricing and form factors (desktop cards with their own fans, often with RGB lighting). Reliability is important, but an occasional crash is an inconvenience, not a catastrophe.

- Server GPUs (Data Center GPUs): Prioritize computational throughput, reliability, scalability, manageability, and efficiency in demanding 24/7 environments. They are engineered for data centers and enterprise workloads where uptime is paramount, data integrity is non-negotiable, and tasks involve massive parallel processing far beyond graphics.

Core Architectural Differences: The DNA of Reliability & Scale

The divergence starts at the silicon level and permeates every aspect of server GPU design:

- ECC Memory (Error-Correcting Code): This is critical. Server GPUs protect their memory with ECC, which corrects single-bit errors on the fly and typically detects (but cannot correct) double-bit errors. Consumer GPUs typically lack full ECC. In a data center processing petabytes of scientific data, handling financial transactions, or training multi-billion-parameter AI models, a single uncorrected memory error could silently corrupt results or crash a long-running workload costing millions. ECC guards data integrity.

- Enhanced Reliability & Durability: Server GPUs are built for continuous operation under heavy load:

  - Higher Quality Components: More robust capacitors, power phases, and PCBs designed for thermal cycling and long-term stability.

  - Extended Lifetimes: Qualified for 24/7 operation for years, far exceeding the typical usage patterns of a gaming card.

  - Rigorous Validation: They undergo extensive testing against enterprise server platforms and software stacks.

- Virtualization Support (vGPU, MIG, SRIOV): Server GPUs are designed to be shared. Technologies like:

- NVIDIA vGPU / AMD MxGPU / Intel SRIOV: Allow a single physical GPU to be securely partitioned into multiple virtual GPUs (vGPUs), each assigned to a different virtual machine (VM) or container. This enables efficient resource utilization in cloud environments and virtual desktop infrastructure (VDI).

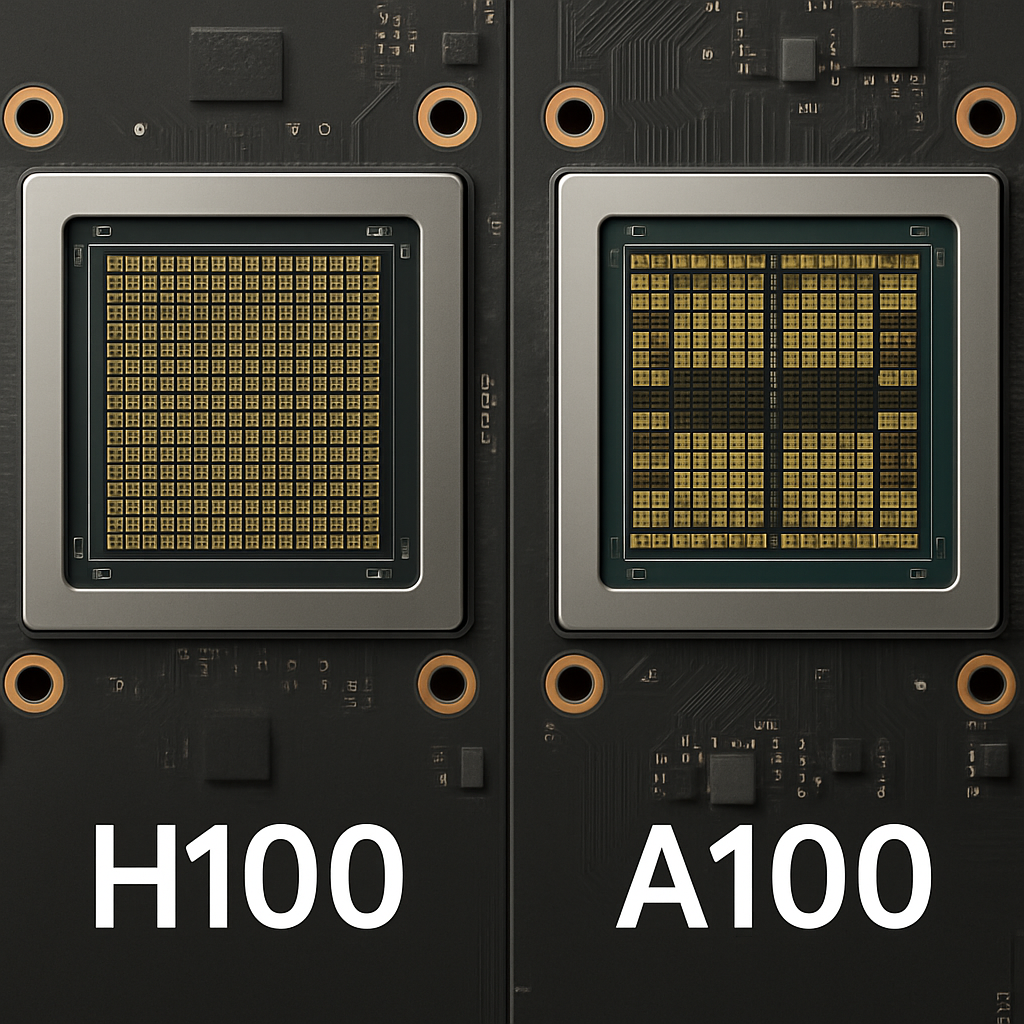

- NVIDIA MIG (Multi-Instance GPU): On Ampere (A100) and Hopper (H100) architectures, physically partitions the GPU into smaller, isolated instances with guaranteed quality-of-service (QoS), perfect for serving multiple smaller AI inference jobs simultaneously.

- Optimized Form Factors & Cooling:

  - Passive Cooling: Most server GPUs rely on passive heatsinks (no onboard fans). Cooling is provided by the server chassis’s high-throughput, redundant fans blowing air front-to-back. This improves reliability (no onboard fan to fail) and density.

  - Dense Form Factors: Module designs such as NVIDIA’s SXM (used for A100/H100 in DGX and HGX systems) and the OCP Accelerator Module (OAM) used by AMD’s MI300X skip the PCIe card format entirely: the GPU mounts directly on a baseboard, enabling higher power delivery, more interconnect bandwidth, and more GPUs per server. PCIe cards (like the L4, L40S, and Intel’s Max 1100) are also common, but are designed for server airflow.

  - Slot Width: PCIe server cards come in single-slot (e.g., L4) and dual-slot (e.g., L40S) designs to fit densely into 1U/2U servers.

- Optimized for Specific Workloads: While powerful, server GPUs often trade peak gaming clock speeds for:

  - Higher Memory Bandwidth: Crucial for feeding massive AI models or scientific datasets (e.g., HBM2e/HBM3 on high-end cards).

  - Specialized Cores: Abundant Tensor Cores (NVIDIA) or Matrix Cores (AMD) for AI acceleration, plus strong FP64 (double-precision) throughput on HPC-oriented parts for scientific computing.

- Software Stack: Supported by enterprise-grade drivers, management and monitoring tools (NVIDIA DCGM, AMD SMI), and optimized software platforms and libraries (CUDA and cuDNN; ROCm and its libraries) for professional workloads.
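To make the ECC bullet concrete, here is a toy sketch of a Hamming(7,4) code, the classic single-error-correcting scheme. It only illustrates the principle: real GPU memory uses wider SECDED codes implemented in hardware, and the `encode`/`decode` helpers below are hypothetical names, not any vendor’s API.

```python
# Toy Hamming(7,4) code: 4 data bits -> 7-bit codeword that can correct any single flipped bit.
# Real GPU ECC uses wider SECDED codes in hardware; this only illustrates the idea.

def encode(data: list[int]) -> list[int]:
    """Encode [d1, d2, d3, d4] as p1 p2 d1 p3 d2 d3 d4 (positions 1..7)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def decode(code: list[int]) -> tuple[list[int], int]:
    """Return (corrected data bits, 1-indexed position of the flipped bit, or 0 if none)."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # re-check positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # re-check positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # re-check positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s3  # binary syndrome = position of the bad bit
    if syndrome:
        c[syndrome - 1] ^= 1  # flip it back
    return [c[2], c[4], c[5], c[6]], syndrome


word = encode([1, 0, 1, 1])
word[4] ^= 1  # simulate a single-bit upset at position 5
print(decode(word))  # ([1, 0, 1, 1], 5)
```

Plain Hamming(7,4) would silently miscorrect a double-bit flip; SECDED codes add one overall parity bit so such errors are at least detected and reported.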
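The MIG bullet describes carving one GPU into isolated slices. As a rough sketch, assuming the A100 40GB profile table from NVIDIA’s MIG documentation (7 compute slices and 8 memory slices in total), the check below tests whether a requested mix of instances fits the slice budget. It deliberately ignores MIG’s placement rules, so it is a necessary condition only: the driver may still reject a request that passes. The `fits` helper is hypothetical.

```python
# (compute slices, memory slices) per MIG profile on an A100 40GB.
# Other GPUs (e.g., H100 80GB) expose different profiles.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits(requested: list[str]) -> bool:
    """Necessary (not sufficient) check that the requested instances fit the slice budget.

    Real MIG also enforces fixed placement positions, which this sketch ignores.
    """
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits(["1g.5gb"] * 7))           # True: seven small inference instances
print(fits(["3g.20gb", "3g.20gb"]))   # True: 6 compute slices, 8 memory slices
print(fits(["4g.20gb", "4g.20gb"]))   # False: 8 compute slices exceed 7
```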
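Why does memory bandwidth matter so much? A common back-of-the-envelope argument: when a large language model generates text one token at a time with a small batch, every weight must be streamed from memory for each token, so bandwidth sets a floor on latency. The sketch below computes that floor under simplifying assumptions (batch size 1, weights read exactly once per token, KV cache, on-chip caches, and compute time ignored). The model size and bandwidth figures are illustrative, not specifications of any particular card.

```python
def min_ms_per_token(params_billions: float, bytes_per_param: float, bandwidth_tb_s: float) -> float:
    """Lower bound on per-token latency (ms) if all weights are read from memory once per token."""
    bytes_per_token = params_billions * 1e9 * bytes_per_param
    seconds = bytes_per_token / (bandwidth_tb_s * 1e12)
    return seconds * 1e3


# Illustrative: a 7B-parameter model in FP16 (2 bytes per parameter) is ~14 GB of weights.
for bw in (1.0, 3.0):  # hypothetical memory bandwidths in TB/s
    print(f"{bw} TB/s -> at least {min_ms_per_token(7, 2, bw):.1f} ms per token")
```

Tripling bandwidth cuts the floor by the same factor, which is why HBM-equipped cards do well on this kind of memory-bound workload.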

Key Players & Their Flagships

The server GPU market is fiercely competitive, driven by the explosion of AI and accelerated computing:

- NVIDIA: The dominant leader.

  - High-Performance AI/Compute: H100 (Hopper) and A100 (Ampere), available in SXM and PCIe variants. These are the workhorses for AI training and large-scale inference.

  - Versatile Data Center: L40S (Ada Lovelace), optimized for AI inference, graphics-intensive VDI, rendering, and media transcoding; and L4, an efficient entry-level card for AI inference and VDI.

- AMD: Gaining significant traction.

  - Instinct MI300 Series: AMD’s flagship, built from chiplets on the CDNA 3 architecture. The MI300X pairs GPU chiplets with 192 GB of HBM3 and very high memory bandwidth, posing a strong challenge to NVIDIA in the AI accelerator space; its sibling, the MI300A, combines Zen 4 CPU and GPU chiplets in a single package.

- Intel: Aggressively entering the market.

  - Max Series GPU (Ponte Vecchio): A complex chiplet-based design targeting high-performance computing (HPC) and AI, notably powering the Aurora supercomputer. Focuses on FP64 performance and scalability.

Why They Exist: Meeting the Demands of the Modern Data Center

Consumer GPUs simply can’t cut it in the enterprise. Server GPUs exist because data centers and critical workloads demand:

- Reliability & Uptime: Enterprises cannot afford crashes or data corruption. Server GPUs are built for continuous, error-resistant operation. Downtime is measured in dollars lost per minute.

- Massive Parallel Processing: Modern workloads are inherently parallel:

  - Artificial Intelligence / Machine Learning: Training complex neural networks and deploying inference at scale requires immense parallel math, accelerated by tensor cores.

  - High-Performance Computing (HPC): Scientific simulations (climate modeling, drug discovery, CFD), financial modeling, genomics.

  - Data Analytics & Big Data: Accelerating database queries, real-time analytics on massive datasets.

  - Virtualization & Cloud: Delivering GPU power to VMs and containers securely and efficiently (vGPU).

  - Rendering & Simulation: Creating complex visual effects, architectural visualizations, product design simulations.

  - VDI & Cloud Gaming: Providing high-performance virtual desktops or gaming experiences from the cloud.

- Scalability: Workloads often require hundreds or thousands of GPUs working in concert. Server GPUs are designed with high-speed interconnects (NVLink, Infinity Fabric) and management tools for large-scale deployment.

- Manageability: Centralized tools for monitoring health, performance, utilization, and deploying updates across vast fleets of GPUs are essential for data center operations.

- Efficiency: Performance-per-watt is crucial in power-hungry data centers. Server GPUs are optimized for computational density and energy efficiency under sustained load.
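The Scalability point hinges on interconnects. During multi-GPU training, GPUs repeatedly sum their gradients with an all-reduce collective, and libraries such as NVIDIA’s NCCL can implement this with a ring algorithm. The sketch below simulates a ring all-reduce (a reduce-scatter pass followed by an all-gather pass) on plain Python lists. It is a teaching model, not NCCL’s implementation, and `ring_allreduce` is a hypothetical helper; to keep it short, each buffer holds exactly one element per worker, so each element is one chunk.

```python
def ring_allreduce(buffers: list[list[float]]) -> tuple[list[list[float]], int]:
    """Simulate a ring all-reduce across n workers; buffers[i] is worker i's data.

    Simplification: each buffer has exactly n elements, and element c is "chunk" c.
    Returns (final buffers, number of chunks each worker sent).
    """
    n = len(buffers)
    if any(len(b) != n for b in buffers):
        raise ValueError("this sketch expects one element per worker in each buffer")
    data = [list(b) for b in buffers]  # copy so the caller's lists are untouched
    chunks_sent = 0  # every worker sends exactly one chunk per step

    # Reduce-scatter: at step s, worker i sends chunk (i - s) % n to its right-hand
    # neighbour, which adds it in. Afterwards worker i holds the full sum of chunk (i + 1) % n.
    for s in range(n - 1):
        msgs = [(i, (i - s) % n, data[i][(i - s) % n]) for i in range(n)]  # sends happen "at once"
        for i, c, value in msgs:
            data[(i + 1) % n][c] += value
        chunks_sent += 1

    # All-gather: at step s, worker i forwards the completed chunk (i + 1 - s) % n,
    # and the neighbour overwrites its stale copy.
    for s in range(n - 1):
        msgs = [(i, (i + 1 - s) % n, data[i][(i + 1 - s) % n]) for i in range(n)]
        for i, c, value in msgs:
            data[(i + 1) % n][c] = value
        chunks_sent += 1

    return data, chunks_sent


# Three hypothetical GPUs, each holding a 3-element gradient buffer.
result, sent = ring_allreduce([[1.0, 2.0, 3.0], [10.0, 20.0, 30.0], [100.0, 200.0, 300.0]])
print(result[0], sent)  # [111.0, 222.0, 333.0] 4
```

Each worker sends 2(N-1) chunks of size S/N, about 2(N-1)/N × S bytes in total, which approaches twice the buffer size no matter how many GPUs join. Synchronization time therefore depends heavily on per-link bandwidth, which is what interconnects like NVLink and Infinity Fabric provide.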
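On the Manageability side, fleet tools like NVIDIA DCGM handle health monitoring at scale, but the idea can be sketched with `nvidia-smi`’s CSV query mode. The Python below parses output in the shape produced by `nvidia-smi --query-gpu=index,temperature.gpu,ecc.errors.uncorrected.volatile.total --format=csv,noheader,nounits` and flags GPUs that need attention. The sample rows are made up, and both the 85 °C threshold and the `flag_gpus` helper are hypothetical policy choices, not vendor recommendations.

```python
import csv
import io

# Made-up sample in the shape of:
#   nvidia-smi --query-gpu=index,temperature.gpu,ecc.errors.uncorrected.volatile.total
#              --format=csv,noheader,nounits
SAMPLE = """0, 54, 0
1, 61, 0
2, 88, 3
3, 47, [N/A]
"""


def flag_gpus(csv_text: str, max_temp_c: int = 85) -> list[tuple[int, str]]:
    """Return (gpu_index, reason) pairs for GPUs that need attention.

    max_temp_c is a hypothetical alert threshold, not a vendor limit.
    """
    alerts = []
    for row in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if not row:
            continue  # skip blank lines
        index, temp, ecc = (field.strip() for field in row)
        gpu = int(index)
        if not ecc.isdigit():
            # e.g. "[N/A]" when the card or mode does not report ECC counters
            alerts.append((gpu, "ECC counters unavailable"))
        elif int(ecc) > 0:
            alerts.append((gpu, f"{ecc} uncorrected ECC errors"))
        if temp.isdigit() and int(temp) > max_temp_c:
            alerts.append((gpu, f"temperature {temp} C"))
    return alerts


print(flag_gpus(SAMPLE))
# [(2, '3 uncorrected ECC errors'), (2, 'temperature 88 C'), (3, 'ECC counters unavailable')]
```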

Conclusion: The Silent Powerhouses

Server GPUs are far more than just “gaming cards in a server.” They are specialized, robust computational engines engineered from the ground up for the relentless demands of the data center. With features like ECC memory, enterprise-grade reliability, advanced virtualization, dense form factors, and software stacks tailored for parallel workloads like AI and HPC, they form the indispensable backbone of cloud computing, scientific discovery, artificial intelligence, and the modern digital enterprise. While they won’t make your games run faster, they are the powerhouses silently shaping the future.