The rapid advancement of artificial intelligence, from large language models (LLMs) that can write poetry to sophisticated scientific simulations, is largely powered by a specialized class of hardware: the Graphics Processing Unit (GPU). In this arena, Nvidia stands as a dominant force, and two of its most powerful offerings, the A100 and its successor, the H100, are the workhorses behind many of today’s AI breakthroughs.

For businesses and researchers venturing into AI, the choice between these two GPUs is a critical one, with significant implications for performance, cost, and project timelines. This article provides a detailed comparison of the Nvidia A100 and H100, with a deep dive into their architectural differences, performance on AI-related tasks, cost-benefit analysis, and guidance on making the right choice for your specific needs.

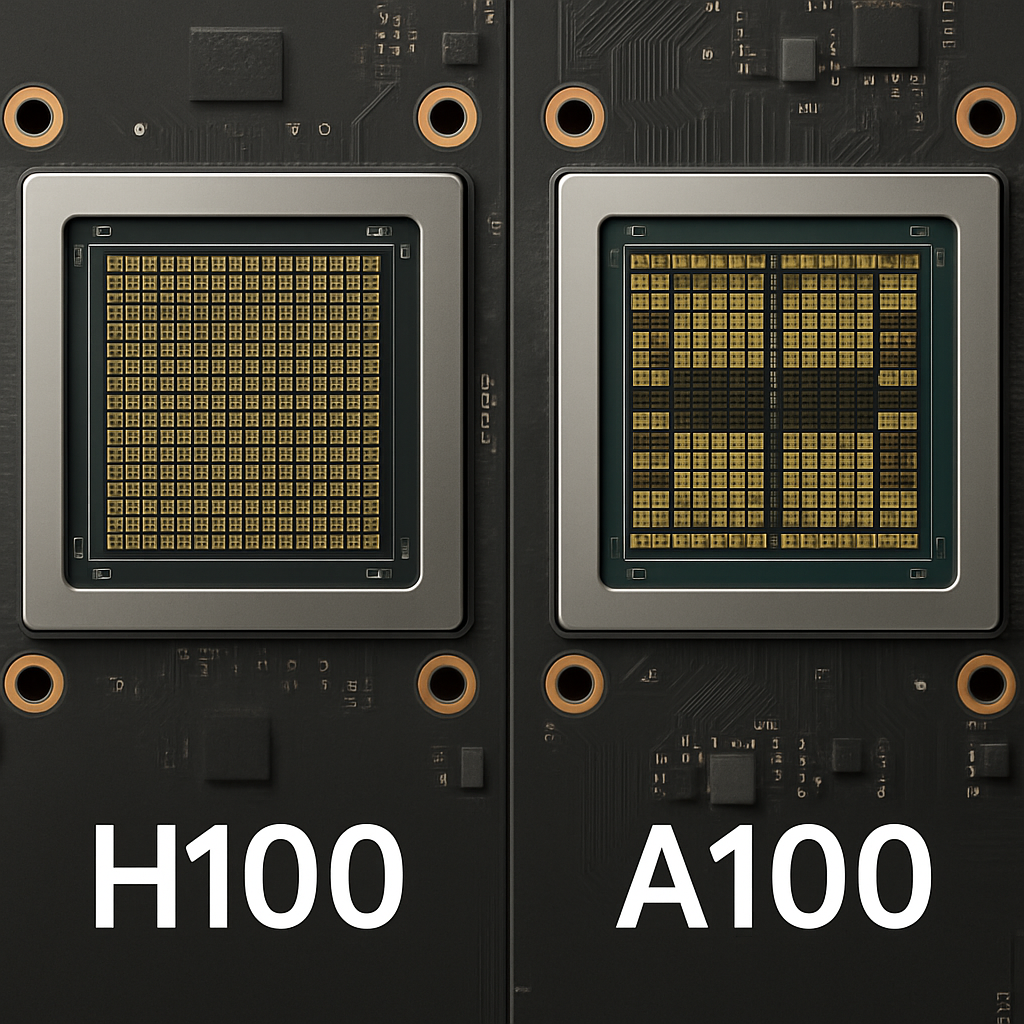

The Contenders: A100 and H100 at a Glance

The Nvidia A100 Tensor Core GPU, launched in 2020, is built on the Ampere architecture. It quickly became the gold standard for AI training and inference, offering a massive leap in performance over its predecessors. It was designed to be a versatile powerhouse, adept at a wide range of high-performance computing (HPC) and AI tasks.

The Nvidia H100 Tensor Core GPU, introduced in 2022, is based on the newer Hopper architecture. It was engineered with a specific focus on the demanding requirements of transformer models, the architecture behind LLMs like GPT-4. The H100 represents a significant generational leap, with architectural innovations aimed at accelerating AI workloads at an unprecedented scale.

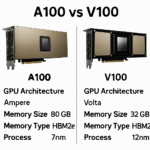

| Feature | Nvidia A100 | Nvidia H100 |

|---|---|---|

| Architecture | Ampere | Hopper |

| Release Year | 2020 | 2022 |

| GPU Memory | Up to 80GB HBM2e | 80GB HBM3 |

| Memory Bandwidth | Up to 2.0 TB/s | Up to 3.35 TB/s |

| Tensor Cores | 3rd Generation | 4th Generation |

| Transformer Engine | No | Yes |

| NVLink | 3rd Generation | 4th Generation |

Architectural Deep Dive: What Sets Them Apart?

While both are formidable GPUs, the H100’s Hopper architecture introduces several key innovations that give it a significant edge, especially for AI.

Tensor Cores and the Transformer Engine

The heart of Nvidia’s AI dominance lies in its Tensor Cores, specialized processing units designed to accelerate the matrix operations fundamental to deep learning. The A100 features third-generation Tensor Cores, which were a major step up in their time.

The H100, however, introduces fourth-generation Tensor Cores and a game-changing feature: the Transformer Engine. This engine is specifically designed to accelerate transformer models, the backbone of modern LLMs. It does this by intelligently managing and dynamically switching between FP8 (8-bit floating-point) and FP16 (16-bit floating-point) precision. This allows for significantly higher throughput with minimal loss in accuracy, a crucial advantage when training and deploying massive language models. The A100 does not have a Transformer Engine and lacks FP8 support.

Memory and Bandwidth

AI models, particularly large ones, are incredibly data-hungry. The speed at which a GPU can access and process this data is a critical bottleneck. The A100 is equipped with up to 80GB of High Bandwidth Memory (HBM2e), offering a respectable memory bandwidth of up to 2.0 TB/s.

The H100 raises the bar with 80GB of HBM3 memory, boosting the memory bandwidth to an impressive 3.35 TB/s. This nearly 70% increase in bandwidth means the H100 can feed data to its cores much faster, which is essential for keeping the powerful new Tensor Cores fully utilized and for handling the massive datasets required for training large models.

CUDA Cores and Other Enhancements

The H100 also boasts a significant increase in the number of CUDA cores, the general-purpose processors on the GPU. This, combined with a second-generation Multi-Instance GPU (MIG) feature, allows for more flexible and efficient partitioning of the GPU for smaller workloads. The fourth-generation NVLink interconnect on the H100 also provides faster communication between GPUs in a multi-GPU setup, which is crucial for large-scale training.

Performance in the Real World: AI and LLM Benchmarks

The architectural improvements in the H100 translate into substantial performance gains in real-world AI applications.

Training Performance

For training large AI models, the H100 is significantly faster than the A100. Nvidia claims that the H100 can offer up to 9x faster AI training on large language models. Independent benchmarks have consistently shown the H100 to be at least 2-4x faster than the A100 for a wide range of deep learning models.

For example, in training models like GPT-3, the combination of the Transformer Engine, HBM3 memory, and fourth-generation Tensor Cores allows the H100 to complete training runs in a fraction of the time it would take an A100.

Inference Performance

The performance gap is even more pronounced in AI inference, the process of using a trained model to make predictions. Here, the H100 can be up to 30x faster than the A100. This massive lead is particularly important for real-time applications like chatbots, recommendation engines, and fraud detection systems, where low latency is critical.

A widely cited benchmark using the Llama 3.1 70B model for inference showed that the H100 SXM5 variant delivered 2.8 times the throughput (tokens generated per second) of the A100 NVLink version. This means a single H100 can handle the workload of nearly three A100s for this specific task.

Major Projects and Performance Testing

These performance differences have been extensively tested by major cloud providers and AI research labs. For instance, the training of large language models by companies like OpenAI, Google, and Meta heavily relies on massive clusters of these GPUs. The performance gains observed in these projects are not just theoretical; they translate to faster research and development cycles and the ability to train larger, more capable models.

Cost vs. Performance: The Billion-Dollar Question

The superior performance of the H100 comes at a higher price.

Upfront and Cloud Pricing

A single H100 GPU can cost upwards of $30,000, while an A100 is typically in the $10,000 – $15,000 range. In the cloud, you can expect to pay around $2.00 – $3.50 per hour for an H100 instance, compared to $1.00 – $2.00 per hour for an A100.

Total Cost of Ownership (TCO)

A simple price comparison can be misleading. A more insightful metric is the Total Cost of Ownership (TCO), which considers the overall cost of a project. Because the H100 can complete tasks much faster, it can actually be more cost-effective for large-scale projects.

For example, if an H100 can train a model in one-third of the time of an A100, even if it costs twice as much per hour, the total cost for the training run will be lower with the H100. This is especially true for large training runs that can take days or even weeks. Furthermore, the H100’s greater performance per watt can lead to significant energy savings in large data center deployments.

How to Make the Choice: A Practical Guide

The decision between the A100 and H100 ultimately depends on your specific needs, budget, and project goals.

When to Choose the H100:

- Cutting-Edge AI Research: If you are working on state-of-the-art AI models, especially large language models, the H100 is the clear choice. Its performance advantages will significantly accelerate your research and development.

- Large-Scale Training: For training massive models that require significant computational resources, the H100’s speed and efficiency will lead to a lower TCO.

- High-Throughput Inference: If you are deploying AI services that require low latency and high throughput, the H100’s inference performance is unmatched.

- Future-Proofing: Investing in the H100 ensures you have the latest and most capable hardware, which will be supported for years to come.

When the A100 is Still a Great Option:

- Budget Constraints: For smaller projects or startups with limited budgets, the A100 offers excellent performance at a more accessible price point.

- General-Purpose AI Workloads: The A100 is a versatile GPU that is still highly capable for a wide range of AI tasks, including computer vision, natural language processing, and recommender systems.

- Mature Ecosystem: The A100 has a very mature software ecosystem, and many AI applications are already optimized for it.

- When Absolute Top-Tier Performance is Not a Strict Requirement: If your application doesn’t demand the absolute fastest performance, the A100 provides a great balance of price and performance.

Conclusion

The Nvidia H100 is undeniably the new king of AI GPUs, offering a monumental leap in performance over the already impressive A100. Its architectural innovations, particularly the Transformer Engine, make it an indispensable tool for anyone working at the forefront of AI, especially with large language models.

However, the A100 is far from obsolete. It remains a powerful and cost-effective solution for a wide range of AI workloads and represents a more pragmatic choice for those with more modest performance requirements or tighter budget constraints.

Ultimately, the best choice depends on a careful evaluation of your specific project needs, budget, and long-term goals. By understanding the key differences in architecture, performance, and cost, you can make an informed decision that will empower your AI journey.