Section 1: The Strategic Asset: Deconstructing the 5x H100 Cluster

The foundation of any technology startup is its core asset. In this case, that asset is a cluster of five NVIDIA H100 GPUs—a concentration of computational power that represents a significant capital investment and a formidable competitive tool. However, to translate this raw power into a viable business, it is imperative to move beyond surface-level specifications and understand the asset’s precise capabilities, its inherent limitations, and its optimal strategic application. This cluster is not a miniature version of a hyperscale cloud data center; it is a specialized, high-performance engine uniquely suited for specific, high-value workloads. A granular analysis of its architecture reveals that its primary strength lies not in training massive foundational models from scratch, but in the agile and efficient fine-tuning of existing models and the high-throughput, low-cost serving of inference requests.

1.1 Beyond the Teraflops: Translating H100 Specifications into Business Capabilities

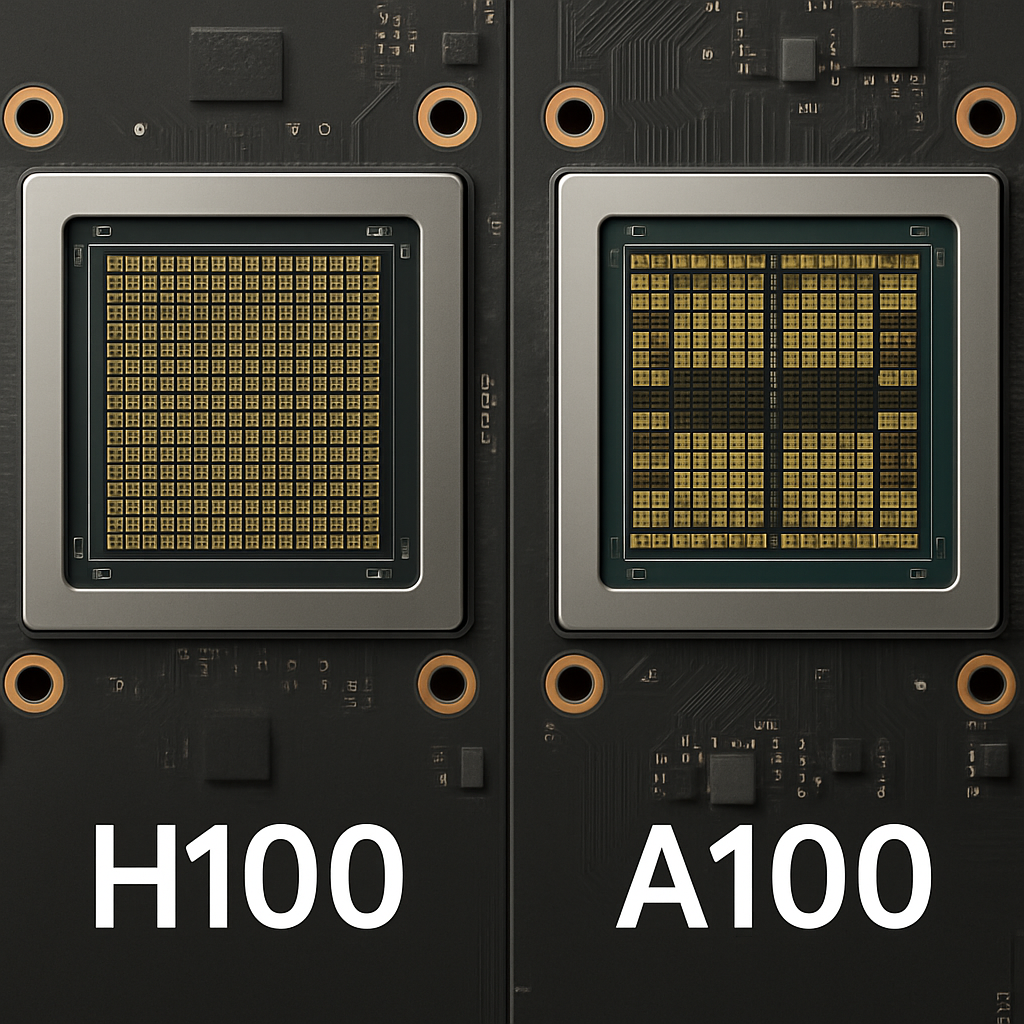

The NVIDIA H100 GPU, built on the Hopper architecture, represents an order-of-magnitude leap in performance for AI and high-performance computing (HPC) workloads compared to its predecessors.1 This advancement is not merely a quantitative increase in processing speed but a qualitative shift in how AI-specific computations are handled. For a startup, understanding these architectural nuances is the first step in crafting a defensible business strategy. The H100 is constructed with 80 billion transistors using a custom TSMC 4N process, making it one of the most advanced chips ever built.3 This engineering feat underpins several key features that directly translate into business capabilities.

The most significant of these features are the fourth-generation Tensor Cores combined with the dedicated Transformer Engine.5 At a fundamental level, training and running modern large language models (LLMs) involves an immense volume of matrix multiply-accumulate (MMA) operations. The H100’s Tensor Cores are purpose-built for this task, delivering twice the MMA computational rates of the previous-generation A100 GPU on equivalent data types.3 This raw architectural advantage is then amplified by the Transformer Engine, which intelligently manages computations in mixed precision, primarily leveraging the new FP8 (8-bit floating point) data format.2

The FP8 precision advantage is a cornerstone of the H100’s economic viability for a startup. The Transformer Engine dynamically switches between FP8 and FP16 precision at each layer of a model, preserving accuracy while maximizing the throughput and memory savings of FP8.5 With FP8, the PCIe card reaches a peak of 3,026 TFLOPS (with sparsity), a figure that dramatically accelerates both training and inference.1 For training, this means that fine-tuning large models takes significantly less time, with NVIDIA projecting up to 9x faster training for certain Mixture-of-Experts (MoE) models compared to the A100.7 For inference (the process of using a trained model to make predictions), the gains are even more pronounced, with up to 30x higher performance on very large models like Megatron 530B.7 This efficiency translates directly into lower operational costs. By processing more requests in less time and with less energy, a startup can offer more competitive pricing for its services, a crucial differentiator in a market where compute cost is a primary concern.8

This computational efficiency is supported by a formidable memory subsystem. Each H100 GPU is equipped with 80GB of high-bandwidth memory (HBM): HBM2e in the PCIe version and faster HBM3 in the SXM version.3 This memory provides up to 2 TB/s of bandwidth in the PCIe card and 3.35 TB/s in the SXM module.10 The capacity and speed are critical for two reasons. First, they allow larger models to be loaded into a single GPU’s memory, which is essential for both fine-tuning and inference. Second, they enable larger batch sizes during training, which improves computational throughput and can accelerate the fine-tuning process.5 The 50 MB of L2 cache complements this by reducing how often the GPU must go out to HBM, which speeds up data access.3
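To make the capacity argument concrete, the following is a rough sketch of the arithmetic behind "does this model fit on one 80GB GPU?". It counts weight memory only; the 20% headroom reserved for activations and KV cache is an illustrative assumption, not a measured figure, and the function names are hypothetical.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_gpu(num_params: float, dtype: str,
                    gpu_memory_gb: float = 80.0,
                    headroom_fraction: float = 0.2) -> bool:
    """True if the weights fit after reserving headroom.

    headroom_fraction is an assumed allowance for activations,
    KV cache, and framework overhead; real needs vary by workload.
    """
    usable_gb = gpu_memory_gb * (1.0 - headroom_fraction)
    return weight_memory_gb(num_params, dtype) <= usable_gb


for params, dtype in [(8e9, "fp16"), (70e9, "fp16"), (70e9, "fp8")]:
    print(f"{params / 1e9:.0f}B @ {dtype}: "
          f"{weight_memory_gb(params, dtype):.0f} GB, "
          f"fits on one GPU: {fits_on_one_gpu(params, dtype)}")
```

Under these assumptions an 8B-parameter model in FP16 fits comfortably on one card, while a 70B-parameter model needs to be split across GPUs even before optimizer state is considered.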

Finally, the second-generation Multi-Instance GPU (MIG) technology provides a vital mechanism for maximizing asset utilization.1 MIG allows a single H100 to be securely partitioned into as many as seven independent, isolated GPU instances, each with its own dedicated memory (e.g., 10GB) and compute resources.7 For a startup, this feature is a powerful tool for revenue optimization. During periods when the full power of a GPU is not required for a large, singular task, it can be divided to serve multiple smaller clients, host several different models for inference, or provide development sandboxes simultaneously.13 This transforms potentially idle hardware from a cost center into a continuous source of revenue, smoothing out the financial peaks and valleys of project-based work.
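The partitioning idea can be sketched as a small planning exercise. The snippet below assigns each workload the smallest MIG profile whose memory covers it and tracks how many of the seven compute slices remain. The profile list is a subset of the H100 80GB profiles, the workload names and sizes are hypothetical, and the slice-counting rule is a simplification: real MIG placement has additional constraints that this sketch does not model.

```python
# (profile name, compute slices used, memory in GB).
# A subset of H100 80GB MIG profiles, ordered from smallest to largest.
MIG_PROFILES = [
    ("1g.10gb", 1, 10),
    ("2g.20gb", 2, 20),
    ("3g.40gb", 3, 40),
    ("7g.80gb", 7, 80),
]


def plan_partitions(workloads_gb, total_compute_slices=7):
    """Greedy plan: smallest profile that fits each workload's memory.

    Returns (plan, free_slices), where plan is a list of
    (workload name, profile name or None if it could not be placed).
    Simplified: only the compute-slice budget is checked.
    """
    plan = []
    used = 0
    for name, need_gb in workloads_gb.items():
        profile = next((p for p in MIG_PROFILES if p[2] >= need_gb), None)
        if profile is None or used + profile[1] > total_compute_slices:
            plan.append((name, None))  # does not fit on this GPU
            continue
        used += profile[1]
        plan.append((name, profile[0]))
    return plan, total_compute_slices - used


# Hypothetical workloads and their memory needs in GB.
demo_workloads = {
    "client-a-chatbot": 9,
    "embedding-service": 4,
    "dev-sandbox": 18,
    "client-b-model": 35,
}
demo_plan, demo_free = plan_partitions(demo_workloads)
for workload, profile in demo_plan:
    print(f"{workload}: {profile}")
print(f"free compute slices: {demo_free}")
```

A planner like this is only a starting point for capacity conversations; actual instance creation is done with NVIDIA's tooling and should be validated against the supported profile combinations.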

| H100 Feature | Technical Specification | Direct Business Capability | Source(s) |
| --- | --- | --- | --- |
| 4th-Gen Tensor Cores w/ FP8 | 3,026 TFLOPS (PCIe, with sparsity) | Dramatically lower cost-per-inference and faster fine-tuning cycles, enabling competitive pricing. | 5 |
| 80GB HBM Memory | 2.0 TB/s (PCIe HBM2e) – 3.35 TB/s (SXM HBM3) | Ability to train and serve larger, more complex models; increased throughput via larger batch sizes. | 1 |
| 4th-Gen NVLink | 600 GB/s (PCIe) – 900 GB/s (SXM) | Efficient multi-GPU scaling for tasks that exceed single-GPU memory, such as fine-tuning 70B+ models. | 7 |
| 2nd-Gen Multi-Instance GPU (MIG) | Up to 7 instances @ 10GB each | Maximized GPU utilization; ability to serve multiple clients or models simultaneously from one GPU. | 1 |

1.2 The Power of Five: Multi-GPU Performance and the Criticality of NVLink

A cluster of five H100s is significantly more powerful than five individual GPUs operating in isolation. The key to unlocking this collective power lies in the interconnect technology, which facilitates communication between the GPUs. The efficiency of this communication directly determines the types of problems the cluster can solve and, therefore, the types of services the startup can offer.

The primary interconnect is NVIDIA’s fourth-generation NVLink, a high-speed, direct GPU-to-GPU communication fabric.15 The PCIe version of the H100 provides 600 GB/s of NVLink bandwidth (through NVLink bridges that link cards in pairs), while the SXM version offers 900 GB/s.1 This is a substantial advantage over the standard PCIe Gen5 bus, which provides 128 GB/s of bandwidth for communication with the CPU and the rest of the system.3 This high-speed interconnect is not a luxury; it is a necessity for modern AI workloads. Many state-of-the-art LLMs are too large to fit into the 80GB memory of a single GPU. To train or run these models, they must be split across multiple GPUs using techniques like pipeline parallelism (where different layers of the model reside on different GPUs) or tensor parallelism (where individual operations are split across GPUs). Both techniques require constant, high-speed data exchange between the GPUs, and the low latency and high bandwidth of NVLink are essential to prevent the interconnect from becoming a crippling bottleneck.15 When combined with NVSwitch technology in larger server configurations, this allows for a non-blocking fabric where all GPUs can communicate at full speed, which is crucial for collective operations like AllReduce that are common in distributed training.15
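A back-of-the-envelope estimate shows why interconnect bandwidth matters for AllReduce. The sketch below uses the standard ring all-reduce traffic model, in which each GPU moves 2(N-1)/N times the payload. It treats the quoted bandwidth figures as fully usable link speed, which is an idealization, and the 7B-parameter gradient payload is a hypothetical example.

```python
def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           link_bytes_per_s: float) -> float:
    """Idealized ring all-reduce time.

    Each GPU sends and receives 2 * (N - 1) / N of the payload.
    Latency, protocol overhead, and topology effects are ignored.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic_bytes = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic_bytes / link_bytes_per_s


# Hypothetical payload: FP16 gradients of a 7B-parameter model.
GRADIENT_BYTES = 7e9 * 2

# Bandwidth figures quoted in the text, treated as usable peak rates.
LINKS = {
    "NVLink (SXM, 900 GB/s)": 900e9,
    "NVLink (PCIe bridge, 600 GB/s)": 600e9,
    "PCIe Gen5 (128 GB/s)": 128e9,
}

for name, bandwidth in LINKS.items():
    t = ring_allreduce_seconds(GRADIENT_BYTES, 5, bandwidth)
    print(f"{name}: {t * 1000:.1f} ms per all-reduce")
```

Because this exchange happens on every training step, even a difference of a hundred milliseconds per all-reduce compounds into a large share of total training time.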

This brings to light a critical, yet unspecified, detail about the startup’s hardware: the form factor of the H100s. The GPUs are available in two main variants: PCIe and SXM.12 The PCIe version is a standard card that can be installed in a wide range of servers, offering flexibility and compatibility.10 The SXM version is a specialized module designed for high-density, high-performance server boards like NVIDIA’s own HGX platform.11 The performance difference between a cluster of PCIe cards and a cluster of SXM modules is substantial, particularly for the multi-GPU tasks that are central to a high-end AI startup.

The SXM H100 not only has faster NVLink (900 GB/s vs. 600 GB/s) but also higher memory bandwidth (3.35 TB/s vs. 2.0 TB/s) and a higher power budget (up to 700W vs. 350W).10 MLPerf benchmarks demonstrate this gap starkly: in a multi-GPU configuration, an SXM-based system delivered a 2.6x speedup on LLM inference and a 1.6x speedup on image generation compared to a PCIe-based system.10 This is not an incremental improvement; it fundamentally changes the economics and feasibility of certain services. A startup with five SXM H100s can more effectively tackle the fine-tuning of very large models (e.g., 70B+ parameters) and offer lower-latency inference at scale. A startup with five PCIe H100s, while still immensely powerful, would be better positioned for tasks that are less interconnect-intensive, such as fine-tuning smaller models using data parallelism or hosting a larger number of independent inference workloads. The business plan must be tailored to this specific hardware reality.

It is also crucial to ground this capability in the context of the broader market. A five-GPU cluster, regardless of form factor, cannot compete with the massive-scale infrastructure used to train foundational models from scratch. For instance, xAI reportedly used a supercomputer with 100,000 H100s for its work.18 The startup’s strategic position is not to be a builder of foundational models, but a customizer and deployer of existing ones. Its competitive advantage will not be raw scale, but agility, specialization, and efficiency.

1.3 The Strategic Conclusion: An Engine for Fine-Tuning and Inference

Synthesizing the technical capabilities of the 5x H100 cluster leads to a clear strategic directive. The H100 is a versatile accelerator, excelling at both training and inference workloads.19 However, given the cluster’s size and the competitive landscape, its optimal use is not for pre-training large models from the ground up, but for the highly valuable tasks of fine-tuning and specialized inference.

Fine-tuning is the process of taking a powerful, pre-trained open-source model (like Llama 3 or Mistral) and further training it on a smaller, domain-specific dataset.20 This adapts the model to a specific task, style, or knowledge base, transforming a generalist tool into a specialist expert. For example, a general LLM can be fine-tuned on a corpus of legal documents to become an expert in contract analysis. This process is computationally far less demanding than pre-training but creates immense value by producing a proprietary AI asset tailored to a client’s unique needs.4 The 5x H100 cluster is well sized and architected for this work. With parameter-efficient methods, it can handle the fine-tuning of even 70B+ parameter models in a reasonable timeframe; full fine-tuning at that scale, which must also hold gradients and optimizer state in memory, is a much tighter fit for five 80GB GPUs.9

On the inference side, the cluster’s value proposition is its efficiency. The combination of the Transformer Engine, FP8 support, and high-bandwidth memory allows an H100 to deliver inference with significantly higher throughput and lower latency than previous-generation GPUs.6 A single H100 can process nearly twice as many tokens per second as an A100 for similar models, effectively supporting twice the number of concurrent users or requests.6 This efficiency advantage means the startup can host specialized or fine-tuned models and offer API access at a price point more attractive than that of larger, less specialized cloud providers. The ability to serve these models from a secure, private cluster also addresses the critical data privacy concerns prevalent in many high-value industries.

In essence, the 5x H100 cluster is a high-performance engine for AI customization. It provides the means to take powerful but generic open-source models and forge them into specialized, proprietary tools that solve specific business problems, and then to deploy those tools for clients in a highly efficient and secure manner.

Section 2: Foundational Business Models for Boutique AI Infrastructure

With a clear understanding of the 5x H100 cluster’s capabilities as a specialized engine for fine-tuning and inference, the next step is to map these technical strengths onto viable commercial strategies. A startup with this asset should not attempt to compete with hyperscale cloud providers on raw infrastructure rental. Instead, it must leverage its unique advantages—efficiency, specialization, and the ability to offer secure, high-touch services—to build a defensible business. Three foundational models stand out as particularly well-suited to this boutique AI infrastructure: Fine-Tuning as a Service (FTaaS), the Niche AI-Powered SaaS, and Specialized Inference Hosting. These models are not mutually exclusive; in fact, their synergy forms the basis of a powerful, phased growth strategy.

2.1 Model 1 – The Specialist: Fine-Tuning as a Service (FTaaS)

The most direct path to monetizing the H100 cluster is to offer Fine-Tuning as a Service (FTaaS). This is a high-touch, consultative business model where the startup acts as an expert partner, helping other companies unlock the value of their proprietary data by creating custom AI models. Many organizations possess valuable internal knowledge—legal contracts, customer support logs, scientific research, financial reports—but lack the in-house AI expertise or computational hardware to leverage it effectively.21 The FTaaS startup bridges this gap.

The service offering extends beyond simply running a training script. A comprehensive FTaaS engagement includes several key stages. It begins with Consultation & Data Strategy, where the startup works with the client to identify a high-ROI use case, select the most appropriate open-source base model, and, most importantly, guide the client in preparing, cleaning, and annotating their proprietary dataset for the fine-tuning process.21 This initial phase is critical, as the quality of the final model is highly dependent on the quality of the training data.

The core of the service is the fine-tuning itself. The startup can offer a spectrum of techniques tailored to the client’s budget and performance requirements. This ranges from Full Fine-Tuning, which updates all the weights of the base model for maximum adaptation, to more computationally efficient methods known as Parameter-Efficient Fine-Tuning (PEFT).24 Techniques like LoRA (Low-Rank Adaptation) keep the base model’s weights frozen and train only small low-rank adapter matrices added alongside them, while QLoRA (Quantized LoRA) additionally stores the frozen base model in quantized form to save memory. Both drastically reduce the computational cost and time required for training, making them ideal for a 5-GPU cluster.21
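The savings from LoRA follow from simple arithmetic. For a weight matrix of shape d_out x d_in, LoRA trains two low-rank factors with r x d_in and d_out x r entries, so the trainable count is r(d_in + d_out) instead of d_in x d_out. The sketch below works through one layer; the 4096 x 4096 shape and rank 16 are illustrative assumptions rather than a recommendation.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a LoRA adapter on the same matrix.

    Two factors are trained: A (rank x d_in) and B (d_out x rank).
    """
    return rank * (d_in + d_out)


# Hypothetical projection layer and adapter rank.
D_IN, D_OUT, RANK = 4096, 4096, 16

full = full_params(D_IN, D_OUT)
lora = lora_params(D_IN, D_OUT, RANK)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {RANK}): {lora:,} trainable parameters")
print(f"fraction trained: {lora / full:.2%}")
```

Fewer trainable parameters mean less gradient and optimizer state to hold in GPU memory, which is the main reason these methods suit a small cluster.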

Finally, the service includes Evaluation & Deployment. The startup must rigorously test the newly fine-tuned model against baseline metrics to demonstrate its improved performance on the specific task.24 Following successful evaluation, the startup can help the client deploy the model, either by packaging it for use in the client’s own environment or, more strategically, by hosting it on the startup’s own infrastructure, creating a recurring revenue opportunity.

The target market for FTaaS consists of small to medium-sized enterprises (SMEs) or specialized departments within larger corporations that recognize the potential of AI but are constrained by technical resources. These clients understand the value of their data and are willing to pay a premium for a service that can transform it into a competitive advantage.21

Pricing for FTaaS is typically project-based for the initial engagement, reflecting the high-value intellectual property being created. This can be followed by a recurring revenue model, such as a monthly retainer for ongoing model maintenance, monitoring, and periodic re-training as new data becomes available. Alternatively, some platforms are beginning to price fine-tuning based on the number of tokens processed. For example, the platform FinetuneDB charges between $2.00 and $6.00 per million tokens for fine-tuning various Llama 3 models, providing a market benchmark for this type of service.25
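Token-based pricing can be sanity-checked with a short calculation. The sketch below multiplies the dataset size by the number of training passes and the per-million-token rate. The $2.00 and $6.00 rates are the benchmark figures quoted above; the dataset size, the epoch count, and the assumption that every epoch is billed are hypothetical.

```python
def fine_tuning_price_usd(dataset_tokens: int, epochs: int,
                          price_per_million_usd: float) -> float:
    """Price of a fine-tuning job billed per token processed.

    Assumes every epoch over the dataset is billed at the same rate.
    """
    tokens_processed = dataset_tokens * epochs
    return tokens_processed / 1_000_000 * price_per_million_usd


# Hypothetical engagement: 50M-token client corpus, 3 epochs.
DATASET_TOKENS = 50_000_000
EPOCHS = 3

low_quote = fine_tuning_price_usd(DATASET_TOKENS, EPOCHS, 2.00)
high_quote = fine_tuning_price_usd(DATASET_TOKENS, EPOCHS, 6.00)
print(f"token-based price range: ${low_quote:,.0f} to ${high_quote:,.0f}")
```

A range this small relative to a typical consulting fee is one reason the project-based model, which prices the data strategy and evaluation work rather than the raw compute, is attractive for a boutique provider.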

2.2 Model 2 – The Vertical Integrator: Building a Niche AI-Powered SaaS

A more ambitious and potentially more scalable model is to become a vertical integrator, building a complete AI-powered Software-as-a-Service (SaaS) product for a specific industry niche. In this model, often referred to as an “AI wrapper,” the startup identifies a painful, recurring problem within a vertical and develops a full-stack solution to solve it.26 The 5x H100 cluster serves as the internal R&D and production engine, used to fine-tune and run the proprietary AI model that forms the core of the SaaS offering.

The strategy hinges on deep vertical focus. The most successful AI SaaS products do not try to be general-purpose tools. They target “high-touch” industries with complex, domain-specific workflows where generic models like the public version of ChatGPT fall short.27 The startup’s value proposition is not just access to AI, but access to an AI that understands the unique jargon, processes, and challenges of a specific profession, such as legal, finance, or biotech. The startup owns the entire customer experience, from the user interface to the underlying model, allowing it to create a tightly integrated and highly valuable product.

Case studies of this approach validate its potential. Morgan Stanley, for example, built an internal AI assistant for its wealth management advisors by pairing GPT-4 with its own vast repository of proprietary research reports. This laser-focused approach led to 98% adoption among advisors because it solved a specific, critical pain point: rapidly accessing and synthesizing internal knowledge.28 Similarly, the startup Jenni AI found success by building a tool specifically for academic research and writing, a niche with distinct needs.26 AI automation agencies are also finding traction by building repeatable solutions for specific verticals like recruitment, real estate, and consulting, which often involve complex lead qualification and appointment booking workflows.27

Pricing for a Niche SaaS product typically follows standard industry models. This can include tiered monthly or annual subscriptions (e.g., a Basic plan for individuals, a Pro plan for teams, and an Enterprise plan with advanced features and security).26 Alternatively, a usage-based model could be employed, where customers pay based on the number of API calls, documents processed, or reports generated. This aligns the cost directly with the value the customer receives.26

2.3 Model 3 – The Enabler: High-Performance, Specialized Inference Hosting

The third model is more infrastructure-centric, focusing on providing high-performance, specialized inference hosting as a service. This goes a step beyond generic cloud GPU rental (like AWS or GCP) or even commodity GPU marketplaces (like Vast.ai). Here, the startup leverages its deep expertise and the unique efficiency of the H100 cluster to offer a superior hosting experience for a curated set of AI models.

The core value proposition is performance and cost-efficiency for deployed models. The startup would offer fully managed, low-latency, and high-throughput API endpoints for popular open-source models like Llama 3, Mistral, and Falcon.31 More importantly, it would also host the custom models fine-tuned for clients under the FTaaS model, providing a seamless path from customization to production. The H100’s architectural advantages, particularly the Transformer Engine and FP8 support, allow the startup to serve these models at a lower cost and with higher performance than competitors running on older hardware.6

Executing this model requires a sophisticated technical stack. The startup would need to master deployment and optimization tools like NVIDIA’s Triton Inference Server, which is designed for high-performance inference at scale.33 It would also employ open-source serving solutions like vLLM, which is highly regarded for its efficiency.24 The entire stack would be built on containerization technologies like Docker and orchestrated with Kubernetes to ensure scalability and reliability.22 A key technical advantage would be the implementation of advanced batching techniques. For example, “in-flight batching” (also known as continuous batching), which Triton supports through its TensorRT-LLM backend, admits new sequences into a running batch and retires finished ones at each generation step instead of waiting for the whole batch to complete. This maximizes GPU utilization and can more than double the throughput compared to simple static batching, directly translating to higher capacity and lower costs.23
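The benefit of continuous batching can be seen in a toy simulation. The sketch below is not Triton or vLLM code; it is a simplified model in which every generation step costs the same regardless of how many slots are occupied, and each request needs a fixed number of steps. The request lengths are hypothetical.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Steps needed when each batch runs until its longest request ends."""
    total = 0
    for start in range(0, len(lengths), batch_size):
        total += max(lengths[start:start + batch_size])
    return total


def continuous_batching_steps(lengths, batch_size):
    """Steps needed when freed slots are refilled before every step."""
    queue = deque(lengths)
    active = []  # remaining steps for each in-flight request
    steps = 0
    while queue or active:
        # Admit waiting requests into any free slots.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        steps += 1
        # Advance every in-flight request and drop the finished ones.
        active = [remaining - 1 for remaining in active if remaining > 1]
    return steps


# Hypothetical mix of long and short generations, 4 slots per batch.
REQUEST_LENGTHS = [10, 2, 2, 2, 10, 2, 2, 2]
static_total = static_batching_steps(REQUEST_LENGTHS, 4)
continuous_total = continuous_batching_steps(REQUEST_LENGTHS, 4)
print(f"static batching:     {static_total} steps")
print(f"continuous batching: {continuous_total} steps")
```

In this example static batching needs 20 steps while continuous batching needs 12, because short requests stop holding slots hostage to the longest request in their batch. Real gains depend on the length distribution and on memory limits that this model ignores.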

The target market for this service consists of developers, startups, and businesses that need to integrate a specific AI model into their application but want to avoid the significant cost and complexity of purchasing and managing their own GPU infrastructure. The market for GPU hosting is competitive, with players like Runpod, Together AI, and Hyperstack offering low hourly rates.35 The startup can still differentiate itself by guaranteeing superior performance on specific, high-demand models, offering white-glove support, and providing a secure, private hosting environment that addresses the data privacy concerns of enterprise clients.

Pricing would follow the industry standard for inference APIs: a pay-as-you-go model based on the number of tokens processed, with separate rates for input and output tokens. Market rates from platforms like FinetuneDB provide a useful benchmark: Llama-3-8B inference is priced around $0.30 per million tokens, while the much larger Llama-3-70B is priced around $1.10 per million tokens.25 The startup could establish premium pricing tiers for highly specialized or proprietary fine-tuned models that deliver greater business value.
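The pay-as-you-go structure can be expressed as a short billing calculation with separate input and output rates. The $1.10 per million tokens figure is the Llama-3-70B benchmark quoted above, applied here to both directions for simplicity; the premium output rate and the monthly token volumes are hypothetical.

```python
def monthly_bill_usd(input_tokens: int, output_tokens: int,
                     input_rate_per_million: float,
                     output_rate_per_million: float) -> float:
    """Usage-based bill with separate input and output token rates."""
    return (input_tokens * input_rate_per_million
            + output_tokens * output_rate_per_million) / 1_000_000


# Hypothetical customer: 2B input tokens and 500M output tokens per month.
INPUT_TOKENS = 2_000_000_000
OUTPUT_TOKENS = 500_000_000

# Benchmark rate from the text, used for both directions.
standard_bill = monthly_bill_usd(INPUT_TOKENS, OUTPUT_TOKENS, 1.10, 1.10)

# Hypothetical premium tier: output tokens on a fine-tuned model cost more.
premium_bill = monthly_bill_usd(INPUT_TOKENS, OUTPUT_TOKENS, 1.10, 3.00)

print(f"standard tier: ${standard_bill:,.2f} per month")
print(f"premium tier:  ${premium_bill:,.2f} per month")
```

Separating the two rates lets the startup price generation, which is the more compute-intensive side of serving, differently from prompt processing.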

The strategic interplay between these three models offers a powerful roadmap for the startup. An initial focus on FTaaS (Model 1) generates immediate revenue and provides invaluable market intelligence and data from a specific vertical. This expertise and data then become the defensible foundation for building a Niche SaaS product (Model 2). The custom models developed for these clients can then be hosted on the startup’s own optimized infrastructure (Model 3), creating a sticky, recurring revenue stream. This phased approach allows the business to bootstrap its way from a service-oriented company to a product-led company, systematically de-risking its growth and building a multi-layered competitive moat based on expertise, proprietary data, and superior performance. This strategy also reframes the pricing conversation away from the commoditized cost of compute-hours and towards the high-value solutions being delivered.

| Business Model | Primary Value Proposition | Target Customer | Revenue Model | Key Challenges | Path to Defensibility |
| --- | --- | --- | --- | --- | --- |
| Fine-Tuning as a Service (FTaaS) | Customization & Domain Expertise | Enterprises or SMEs without an in-house AI team but with valuable proprietary data. | Project-based fees for initial fine-tuning; recurring retainers for maintenance. | Long sales cycles, scaling the service-heavy model, client education. | Proprietary fine-tuned models, deep vertical expertise, trusted advisor status. |
| Niche AI-Powered SaaS | End-to-End Workflow Solution | Professionals in a specific, underserved industry vertical (e.g., legal, biotech). | Recurring monthly/annual SaaS subscriptions (tiered or usage-based). | Achieving product-market fit, high customer acquisition costs, user churn. | Network effects within the niche, deep workflow integration (high switching costs), proprietary data flywheel. |
| Specialized Inference Hosting | Performance, Security & Cost-Efficiency | Developers and businesses needing to deploy models without managing infrastructure. | Pay-per-token API usage fees. | Intense competition from larger players, price commoditization, maintaining a performance edge. | Superior performance on curated models, exceptional developer experience, focus on secure/private hosting. |

Section 3: Market Deep Dive: High-Opportunity Verticals for a 5x H100 Startup

The success of a boutique AI startup hinges on applying its powerful computational asset to the right market. The ideal vertical is one characterized by complex, data-rich problems, a high value placed on data privacy and security, and a clear inadequacy of generic, off-the-shelf AI solutions. By focusing on such a niche, a startup with a 5x H100 cluster can build a defensible business that larger, more generalized competitors cannot easily replicate. The following analysis explores four high-opportunity verticals—Legal Technology, Finance & Fintech, Biotechnology & Drug Discovery, and Advanced Manufacturing—with a specific emphasis on the unique “leapfrog” opportunities present in the rapidly digitizing Indian market.

3.1 Vertical Analysis: AI in Legal Technology

The legal industry operates on a foundation of unstructured text, from contracts and court filings to discovery documents and internal communications. Law firms face immense pressure to increase efficiency and reduce costs, yet they are bound by stringent requirements for client confidentiality and data security.36 This creates a fundamental tension: while AI tools could dramatically improve productivity, using public cloud APIs like those from OpenAI or Google risks exposing sensitive client data, a non-starter for most firms.37 This “privacy gap” creates a perfect entry point for a startup with its own secure hardware.

A startup can address this by offering a Niche SaaS or FTaaS platform for legal document analysis that can be deployed on-premise at the law firm or within a secure private cloud. The 5x H100 cluster would be used to fine-tune a powerful open-source model, such as an Apache 2.0-licensed Mistral model, on a vast corpus of legal-specific documents, including jurisdiction-specific case law and statutes.36 This creates a proprietary legal LLM that understands the nuances of legal language far better than any generalist model.

Key features of such a platform would directly target the most time-consuming tasks for legal professionals:

- AI-Powered Document Review: Automating the process of due diligence by scanning thousands of pages of contracts or discovery documents to extract key clauses, identify inconsistencies, and flag potential risks or non-standard language.36

- Intelligent Legal Research: Moving beyond simple keyword search to provide semantic understanding of legal queries. A lawyer could ask a question in natural language, and the system would retrieve the most relevant precedents from decades of case law, understanding the context and intent of the query.36

- Litigation Analytics: By analyzing historical court data, the tool could offer predictive insights into case timelines, potential outcomes based on jurisdiction and presiding judge, and the strategies of opposing counsel.39

- Automated Summarization: A highly valuable feature would be the ability to generate concise summaries of lengthy depositions, complex email chains, or dense case files, allowing lawyers to grasp the core facts in minutes rather than hours.41

The Indian context makes this vertical particularly attractive. The Indian legal system is grappling with a backlog of over 50 million pending cases, creating an urgent, systemic need for efficiency-boosting technologies.40 The market has already been validated by the emergence of Indian legal-tech startups like BharatLaw.ai, CaseMine, and SpotDraft, which are gaining traction.36 Furthermore, the Indian judiciary itself is showing a willingness to adopt AI, with initiatives like SUPACE (Supreme Court Portal for Assistance in Court Efficiency) and SUVAAS (a tool for translating judgments into regional languages), signaling a top-down acceptance of technological innovation.40

3.2 Vertical Analysis: AI in Finance & Fintech

The financial services industry is intensely data-driven, highly regulated, and a constant target for sophisticated fraud. This creates a perpetual demand for tools that can enhance personalization, automate compliance, manage risk, and improve operational efficiency.42 The 5x H100 cluster is an ideal asset for building and deploying a suite of specialized financial models that outperform generic solutions.

A startup in this space could pursue a Specialized Inference Hosting or FTaaS model, focusing on creating and providing API access to fine-tuned financial LLMs. The foundation could be open-source projects like FinGPT, which focuses on financial sentiment analysis, or FinRobot, an AI agent platform for financial analysis.44 The startup would use its H100s to fine-tune these models on massive, real-time datasets of market data, news feeds, regulatory filings, and social media chatter.

The service offerings would be tailored to the specific needs of banks, investment firms, and fintech companies:

- Real-time Risk Management: A service that continuously analyzes market data and news to provide dynamic risk assessments, identifying potential threats before they become critical issues.43

- Automated Compliance Monitoring: A SaaS tool that tracks regulatory updates in real-time and can scan internal communications (e.g., trader chats) to flag potential compliance breaches, a major operational headache for financial institutions.36

- Superior Financial Sentiment Analysis: An API that provides nuanced, accurate sentiment analysis on financial news and social media, a key input for algorithmic trading strategies. FinGPT has demonstrated that a well-tuned open-source model can outperform even GPT-4 on this task.45

- Robo-Advisory Augmentation: Providing AI-driven market insights and analysis to wealth management platforms, helping them generate more sophisticated and personalized investment recommendations for their clients.46

The Indian market for financial AI is booming. A recent survey found that 74% of Indian financial firms have already initiated Generative AI projects.48 The Indian fintech market is valued at an estimated $90 billion, and the government and the Reserve Bank of India (RBI) are proactively establishing regulatory frameworks for AI, signaling a mature and supportive ecosystem.46 Indian companies are already seeing massive returns; Bajaj Finance, for instance, saved ₹150 crore (approximately $18 million) in a single year by deploying AI bots.48 Furthermore, AI is seen as a key enabler of financial inclusion in India, with fintechs using AI-driven analytics to assess creditworthiness for unbanked populations based on alternative data, leapfrogging traditional credit systems.43

3.3 Vertical Analysis: AI in Biotechnology & Drug Discovery

The process of discovering and developing new drugs is notoriously slow, expensive, and fraught with failure.51 AI has the potential to revolutionize this field by dramatically accelerating research and reducing costs. However, many smaller biotechnology firms and academic research labs, while possessing incredibly valuable proprietary biological and chemical data, lack the specialized computational resources and ML expertise to apply AI effectively.52 This creates a clear opportunity for an FTaaS or Niche SaaS startup focused on “AI-as-a-Service for Drug Discovery.”

The startup’s core offering would be to take a client’s unique data—be it genomic sequences, molecular structures, or cell microscopy images—and use the 5x H100 cluster to fine-tune or train a model that can uncover novel biological insights. The privacy and security of this data are paramount, making the startup’s private hardware a key selling point.

Potential services include:

- Genomic Data Analysis: Fine-tuning models to interpret complex Next-Generation Sequencing (NGS) data. This could help identify genetic markers for diseases, predict a patient’s likely response to a particular therapy, or uncover novel drug targets.51

- AI-Powered Compound Screening: Using models to predict how millions of virtual chemical compounds might interact with a specific protein target, allowing researchers to screen for promising drug candidates in silico, saving years of wet-lab experimentation.51

- Advanced Medical Imaging Analysis: Fine-tuning multimodal vision models on a client’s pathology or radiology images to create highly accurate diagnostic tools for diseases like cancer or tuberculosis.54

India is rapidly emerging as a global hub for AI in biotechnology. The country now ranks among the top five globally for AI-biotech patents and venture capital funding.54 The government is actively fostering this growth through initiatives like the National AI Mission and the India AI-Biotech Hub.54 This has given rise to a vibrant ecosystem of successful startups like Qure.ai, which provides AI-powered medical imaging diagnostics in over 60 countries, and Bugworks Research, which uses AI for designing new drugs to combat antimicrobial resistance.54 This domestic success, combined with India’s vast genetic diversity and cost-effective R&D talent, makes it a prime market for an AI-biotech service provider.54

3.4 Vertical Analysis: AI in Advanced Manufacturing & Quality Control

Quality control in manufacturing has traditionally been a major bottleneck, often relying on slow, inconsistent manual inspection or rigid, rule-based machine vision systems that can only detect pre-defined, known defects.58 This approach is inefficient, costly, and incapable of identifying new or unexpected production flaws. This presents a significant opportunity for a Niche SaaS startup to develop a next-generation, AI-powered visual quality control platform.

The core of this product would be an unsupervised anomaly detection model. Instead of being trained on thousands of images of “bad” parts, the model would be trained on images of “perfect” products from a manufacturing line. It would learn the normal state of a product in minute detail and then flag anything that deviates from that norm in real-time.58 This allows the system to catch “unknown unknowns”—novel defects it has never seen before—which is a transformative capability for quality assurance.
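The "train on good parts only" idea can be illustrated with a minimal sketch. It assumes each image has already been reduced to a short numeric feature vector; the feature values, the z-score rule, and the threshold of 4.0 are illustrative assumptions, and a production system would likely replace this statistical profile with a learned vision model.

```python
from statistics import mean, stdev

def fit_normal_profile(good_samples):
    """Learn per-feature mean and standard deviation from defect-free samples only."""
    columns = list(zip(*good_samples))
    return [(mean(col), stdev(col)) for col in columns]

def z_score(value, mu, sigma):
    """Distance from the normal mean, measured in standard deviations."""
    if sigma == 0:
        return 0.0 if value == mu else float("inf")
    return abs(value - mu) / sigma

def anomaly_score(profile, sample):
    """Largest z-score across all features; higher means further from normal."""
    return max(z_score(x, mu, sigma) for x, (mu, sigma) in zip(sample, profile))

def is_anomalous(profile, sample, threshold=4.0):
    return anomaly_score(profile, sample) > threshold

# Hypothetical feature vectors for known-good parts
# (for example: brightness, edge density, width in mm).
good_parts = [
    [0.50, 0.20, 10.0],
    [0.52, 0.21, 10.1],
    [0.49, 0.19, 9.9],
    [0.51, 0.20, 10.0],
    [0.48, 0.22, 10.0],
]
profile = fit_normal_profile(good_parts)

typical_part = [0.50, 0.20, 10.0]
odd_part = [0.50, 0.45, 10.0]  # edge density far outside anything seen in training
print(is_anomalous(profile, typical_part))
print(is_anomalous(profile, odd_part))
```

No defective example is used during fitting, which is why such a detector can flag defect types it has never seen.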

The business model would involve training and fine-tuning these complex models on the central 5x H100 cluster. Then, a smaller, optimized version of the model (using techniques like quantization) would be deployed on edge computing devices directly on the factory floor.58 This edge-native architecture is critical, as it allows for real-time inference at line speed without the latency associated with sending image data to the cloud for analysis. The system could be further enhanced by integrating multimodal vision-language models, which could not only flag a defect but also generate a natural language description of the anomaly for human operators, providing valuable context for remediation.58
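Quantization, mentioned above as a way to shrink the model for edge devices, can be sketched in a few lines. This is a simplified symmetric per-tensor int8 scheme on a plain list of weights, chosen for illustration; the weight values are made up, and real deployments would rely on a framework's quantization tooling rather than hand-written code.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.82, -1.27, 0.003, 0.4, -0.55]  # hypothetical layer weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(f"scale={scale:.4f}, max reconstruction error={max_error:.4f}")
```

Each weight is stored in one byte instead of two or four, at the cost of a reconstruction error bounded by half the scale factor.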

The Indian manufacturing sector is an ideal target market for such a solution. A staggering 99% of Indian manufacturers plan to invest in AI and machine learning in the near future.59 They are already demonstrating a high degree of digital maturity, utilizing 53% of their collected data effectively—a rate that surpasses the global average of 44%.59 Government initiatives like “Make in India” and the Production Linked Incentive (PLI) scheme are driving enormous investment into advanced manufacturing across sectors like automotive, aerospace, and electronics, creating a massive addressable market for quality control solutions.60 The focus on improving production efficiency, reducing waste, and enhancing product quality is a top strategic priority for these companies.62

Section 4: The Open-Source Arsenal: Selecting and Deploying Your Foundational Models

For a startup built on a 5x H100 cluster, the open-source AI ecosystem is not just a resource; it is the arsenal from which its core intellectual property will be forged. The choice of a foundational model is one of the most critical strategic decisions the company will make, with profound implications for performance, cost, and long-term business viability. This decision must be based not only on technical benchmarks but also on a rigorous analysis of the legal and commercial constraints imposed by each model’s license. A failure to navigate this legal gauntlet can render a technically brilliant product commercially unviable.

4.1 Choosing Your Foundation: A Comparative Analysis of Commercially Viable LLMs

The landscape of open-source LLMs is dynamic, with a handful of leading models offering a strong foundation for commercial applications. A 5x H100 cluster is powerful enough to fine-tune even very large models, making several top-tier options accessible.

- Meta’s Llama Series (Llama 3.1, 3.2): Meta’s Llama models are widely regarded as the most capable and complete general-purpose open-source LLMs available.31 They are released in a range of sizes, from a nimble 8B parameters to a powerful 405B variant. For a 5x H100 cluster, the 70B parameter model represents a “sweet spot,” offering a balance of high performance and manageable fine-tuning requirements.32 Llama models consistently perform at or near the top of open-source leaderboards and are supported by a robust ecosystem of tools. However, their use is governed by the Llama Community License, a custom, source-available license with specific commercial restrictions that must be carefully considered.63

- Mistral AI’s Models (Mistral 7B, Mixtral 8x7B): Mistral AI has earned a strong reputation for producing models that are exceptionally efficient, delivering high performance at smaller parameter counts.31 The original Mistral 7B model is a standout for its speed and capability in resource-constrained environments. More advanced models like Mixtral 8x7B and the newer Mixtral 8x22B utilize a sparse Mixture-of-Experts (MoE) architecture. In an MoE model, only a fraction of the total parameters (the “experts”) are activated for any given input token, resulting in dramatically faster inference speeds—up to 6x faster than a dense model of equivalent size—while maintaining high quality.32 Crucially, Mistral’s most popular open models are released under the highly permissive Apache 2.0 license, making them a very attractive option from a commercial and legal perspective.67

- TII’s Falcon Models (Falcon 40B, 180B): The Falcon series of models, developed by the UAE’s Technology Innovation Institute (TII), are also top performers, noted for being trained on the high-quality, extensively filtered “RefinedWeb” dataset.69 The Falcon 180B model, in particular, has demonstrated performance competitive with proprietary models like Google’s PaLM-2.70 While technically powerful, Falcon models come with a significant commercial caveat. Their license, while based on Apache 2.0 principles, includes a unique clause requiring users who wish to make commercial use of the models to apply to TII for permission and potentially pay a royalty on attributable revenue, with a default rate of 10%.71 This royalty obligation is a major financial consideration that could heavily impact a startup’s business model.

- Other Key Players: The ecosystem also includes other strong contenders. Alibaba’s Qwen series is notable for its excellent multilingual and multimodal capabilities.31 DeepSeek has released powerful models, including a 671B parameter MoE model, for companies looking to experiment at the frontier scale.31 The choice among these depends on the specific needs of the startup’s target vertical; for example, Qwen would be a strong choice for an application requiring broad international language support.
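The sparse Mixture-of-Experts idea described in the Mistral entry above can be sketched as top-k routing. This is a toy illustration, not Mistral's implementation: the experts here are simple scaling functions, the router scores are hypothetical, and the choice of 2 active experts out of 8 mirrors the routing reported for Mixtral 8x7B.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Eight toy experts; in a real model each one is a feed-forward network.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical router scores for a single token.
router_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 1.0]

selected = route_top_k(router_logits)
output = moe_forward(1.0, experts, router_logits)
print(selected)
print(output)
```

Only two of the eight expert functions are evaluated for this token, which is where the inference savings come from: total parameters stay large while the compute per token stays small.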

4.2 Beyond Text: Leveraging Multimodal and Code-Generation Models

The most valuable and defensible business opportunities often lie at the intersection of different data types. The open-source community has produced a wealth of powerful models that go beyond simple text generation, enabling a startup to build more sophisticated and compelling products.

- Multimodal Vision Models: These models can process and understand both images and text, a capability that is crucial for the verticals identified in Section 3. High-quality open-source options like Llama 3.2 Vision, Mistral’s Pixtral, Alibaba’s Qwen-VL, and Microsoft’s Florence-2 are now readily available, many under the permissive Apache 2.0 license.74 A startup could use these models to build a manufacturing quality control system that not only detects a visual defect but also describes it in text; a legal tech tool that can analyze a contract containing both text and diagrams; or an e-commerce platform with powerful visual search capabilities. The availability of these models means a startup can build advanced multimodal applications without relying on the proprietary APIs of Google (Gemini) or OpenAI (GPT-4V).75

- Code Generation Models: For startups targeting the developer tools market, a range of specialized code-generation models are available. Models like Meta’s Code Llama, Alibaba’s Qwen2.5-Coder, and DeepSeek-Coder have been fine-tuned on massive code repositories and excel at tasks like code completion, debugging, and translation between programming languages.77 Performance is often measured against benchmarks like HumanEval, which tests a model’s ability to generate functionally correct code.77 A startup could use these models to build a highly specialized code assistant for a particular programming framework, a tool for automatically generating UI components from a natural language description, or an internal productivity tool for a large software development enterprise.
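HumanEval results are usually reported as pass@k: the probability that at least one of k generated samples passes the unit tests. Below is a minimal sketch of the standard unbiased estimator, with hypothetical sample counts.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k estimate.

    n: samples generated per problem
    c: samples that passed the unit tests
    k: sampling budget being evaluated (k <= n)
    """
    if n - c < k:
        # Every possible draw of k samples contains at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

# Hypothetical result: 10 samples generated for one problem, 3 passed.
print(f"pass@1 = {pass_at_k(10, 3, 1):.3f}")
print(f"pass@5 = {pass_at_k(10, 3, 5):.3f}")
```

A benchmark score is the average of this value across all problems, so a startup comparing candidate code models should check that the scores were computed with the same k.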

4.3 The Legal Gauntlet: Navigating Commercial Use Licenses

The license under which a model is released is as important as its performance benchmarks. It dictates what a startup can and cannot do with the model, its modifications, and its outputs. This is a non-negotiable aspect of due diligence.

- Permissive Licenses (Apache 2.0, MIT): These are the “gold standard” for a commercial startup. Licenses like Apache 2.0, used by Mistral for its open models, grant broad permissions: commercial use, modification, distribution, and sublicensing with very few restrictions, typically only requiring that the original copyright and license notices be retained.67 The Falcon license draws on Apache 2.0 but adds the commercial-use clause described below, so it does not belong in this category. Permissive licensing provides maximum flexibility and minimizes legal risk for the business.

- Source-Available with Use-Based Restrictions (Llama 3 Community License): Meta’s license for Llama 3 is a custom, “source-available” license. It is permissive for most uses, allowing for commercial deployment and the creation of derivative works.64 However, it contains two critical restrictions. First, any company whose products or services have more than 700 million monthly active users (MAU) must request a special, separate license from Meta.64 While not an immediate concern for a startup, this has implications for long-term scale. Second, and more critically, the license explicitly prohibits using Llama models or their outputs to “improve any other large language model” (excluding Llama itself).66 This clause is a “poison pill” for potential acquisitions. A large tech company like Google or Microsoft would be unable to acquire a Llama-based startup and integrate its technology or data into their own foundational models (e.g., Gemini or Phi) without violating the license. This could severely limit the startup’s exit opportunities and depress its valuation.

- Royalty-Bearing “Open” Licenses (TII Falcon License): The license for TII’s Falcon models introduces a direct financial obligation. While it allows for research and small-scale commercial use, any organization wishing to make significant “Commercial Use” (defined by revenue attributable to the model) must apply to TII for a commercial license. This license may come with a royalty payment, with the default rate specified as 10% of attributable revenue for revenues exceeding $1 million.71 This is a substantial and potentially complex financial commitment that must be factored into the startup’s long-term business plan.

- Non-Commercial Licenses (e.g., CC-BY-NC-SA): Several models, often those tuned on specific datasets, are released under licenses that explicitly forbid commercial use. For example, the MPT-30B-Chat model uses a Creative Commons Non-Commercial license.69 These models are valuable for research and experimentation but are strictly off-limits for a for-profit startup and must be avoided.
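The financial thresholds in the licenses above lend themselves to quick arithmetic. The sketch below takes the 10% default rate, the $1 million threshold, and the 700 million MAU limit from the text. It assumes the royalty applies only to the portion of attributable revenue above the threshold, which is one possible reading; the actual license terms and legal advice should govern any real plan.

```python
def estimated_falcon_royalty(attributable_revenue, threshold=1_000_000, rate=0.10):
    """Royalty on revenue above the threshold (assumed interpretation)."""
    return max(0.0, attributable_revenue - threshold) * rate

def needs_separate_meta_license(monthly_active_users, limit=700_000_000):
    """True once the Llama license's MAU limit is exceeded."""
    return monthly_active_users > limit

# Hypothetical revenue scenarios, in US dollars.
for revenue in (800_000, 3_000_000, 10_000_000):
    royalty = estimated_falcon_royalty(revenue)
    print(f"revenue ${revenue:,}: estimated royalty ${royalty:,.0f}")

print(needs_separate_meta_license(50_000))
```

Under this reading, a business with $3 million of attributable revenue would owe an estimated $200,000, a cost that a model released under Apache 2.0 would not carry.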

The choice of a foundational model is therefore a complex trade-off between technical performance, business flexibility, and financial obligation. While a model like Llama 3 might offer top-tier performance, the restrictions in its license present a tangible business risk. A model like Mistral’s Mixtral 8x7B, with its Apache 2.0 license, may offer slightly different performance characteristics but provides far greater commercial freedom and a wider range of strategic options for the future. For most startups, especially those with ambitions of being acquired, the legal and commercial freedom afforded by a truly permissive license like Apache 2.0 often outweighs a marginal difference in benchmark scores.

| Model Family | Recommended Model Size for 5xH100 | Key Strengths | License Type | Key Commercial Obligations/Restrictions | Ideal Startup Vertical |
| --- | --- | --- | --- | --- | --- |
| Llama 3 | 70B | Excellent general-purpose performance, strong ecosystem. | Llama 3 Community License | 700M MAU limit; Prohibits using outputs to improve competing LLMs. Attribution required. | General SaaS, Internal Enterprise Tools (where acquisition by a major LLM provider is not the primary goal). |
| Mistral (Open) | Mixtral 8x7B | High efficiency and inference speed (MoE architecture). | Apache 2.0 | Attribution only. | Low-latency Inference Hosting, Niche SaaS (especially where a future acquisition is a possibility). |
| Falcon | 40B | High-quality training data, strong on structured tasks. | TII Falcon License (based on Apache 2.0) | Must apply for commercial use; potential 10% revenue royalty over $1M. | Data-heavy Enterprise (e.g., Finance, Legal) for companies willing to manage the licensing complexity. |
| Qwen | 72B | Strong multilingual and multimodal capabilities. | Apache 2.0 | Attribution only. | Applications requiring global language support, Multimodal SaaS (e.g., document analysis). |
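The recommended model sizes can be sanity-checked with rough memory arithmetic. The sketch below assumes the 80 GB H100 variant, 16-bit weights, and a common rule of thumb of about 16 bytes per parameter for full fine-tuning with a mixed-precision Adam optimizer (activations excluded). The Mixtral figure of 46.7B total parameters is an approximate assumption.

```python
GPU_MEMORY_GB = 80   # assumption: 80 GB H100 variant
NUM_GPUS = 5
CLUSTER_MEMORY_GB = GPU_MEMORY_GB * NUM_GPUS

def weights_gb(params_billion, bytes_per_param=2):
    """Memory for the weights alone (2 bytes per parameter in 16-bit formats)."""
    return params_billion * bytes_per_param

def full_finetune_gb(params_billion):
    """Rule of thumb: ~16 bytes per parameter (16-bit weights and gradients,
    32-bit master weights, two 32-bit optimizer moments), before activations."""
    return params_billion * 16

# Parameter counts in billions, matching the sizes listed in the table.
models = {"Llama 3 70B": 70, "Mixtral 8x7B": 46.7, "Falcon 40B": 40, "Qwen 72B": 72}

print(f"Cluster memory: {CLUSTER_MEMORY_GB} GB")
for name, size in models.items():
    print(f"{name}: weights ~{weights_gb(size):.0f} GB, "
          f"full fine-tune ~{full_finetune_gb(size):.0f} GB")
```

Under these assumptions, every model's 16-bit weights fit within the cluster's combined memory, but full fine-tuning does not. This suggests that parameter-efficient methods, which keep the base weights frozen and train a small set of added parameters, are the practical route at these sizes.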

Section 5: Strategic Recommendations & Go-to-Market Blueprint

The preceding analysis has established the technical capabilities of the 5x H100 GPU cluster, evaluated viable business models, explored high-opportunity verticals, and navigated the complex landscape of open-source AI. This final section synthesizes these findings into a concrete, actionable blueprint for a successful startup. The recommendation is not to pursue all opportunities simultaneously, but to follow a focused, phased approach that builds a defensible competitive moat over time. The hardware asset is the starting point, but the ultimate goal is to build a business whose value resides in its proprietary data, specialized models, and deep domain expertise.

5.1 The Optimal Startup Path: A Niche SaaS for Legal Document Analysis

Based on a comprehensive evaluation of the opportunities, the single most promising and defensible startup to build with a 5x H100 cluster is a Niche SaaS platform for the legal technology vertical. This path is recommended because it optimally aligns the hardware’s strengths with a market need that exhibits several ideal characteristics.

This recommendation is justified by the convergence of key factors:

- High-Value Problem: Legal services are a high-cost center for businesses, and lawyers’ time is extremely valuable. A tool that can demonstrably reduce the hours spent on tedious tasks like document review and research has a clear and quantifiable return on investment, justifying a premium subscription price.36

- Data-Rich and Unstructured Environment: The legal field is built on a massive corpus of unstructured text—contracts, case law, depositions, etc. This is a perfect environment for Large Language Models, which excel at understanding, summarizing, and analyzing such data.40

- Critical Need for Privacy and Security: The paramount importance of client confidentiality creates a powerful demand for secure, private AI solutions. This allows the startup to leverage its privately-owned hardware as a key differentiator, offering on-premise or private cloud deployments that large, public cloud-based AI providers cannot easily match. This “privacy premium” is a significant competitive advantage.37

- Validated and Growing Market: The existence of successful legal-tech startups like SpotDraft and CaseMine in India and CoCounsel in the US validates the market need.40 However, the market is far from saturated. There are numerous opportunities to specialize further by jurisdiction (e.g., focusing solely on Indian corporate law), practice area (e.g., M&A due diligence, IP litigation), or client type (e.g., boutique firms, in-house corporate legal teams).

This strategy follows the “Vertical Integrator” (Model 2) path, with a long-term vision to expand services to include FTaaS (Model 1) and Specialized Inference Hosting (Model 3) specifically for its legal clientele, creating multiple, synergistic revenue streams.

5.2 The First 12 Months: A Phased Go-to-Market Roadmap

A disciplined, phased approach is crucial to de-risk the venture and build momentum. The following 12-month roadmap outlines a path from initial setup to commercial launch.

- Months 1-3 (Setup & Foundation):

  - Hardware and Software Configuration: The first priority is to finalize the hardware setup. Assuming the most likely scenario of PCIe cards, the focus will be on ensuring optimal multi-GPU communication by correctly configuring the NVLink bridges. Because NVLink bridges on PCIe cards connect GPUs in pairs, at most two pairs of the five H100s can be bridged, and the remaining GPU-to-GPU traffic travels over PCIe. The necessary software stack, including NVIDIA drivers, CUDA Toolkit, Docker with the NVIDIA Container Toolkit, and an orchestration layer like Kubernetes, must be installed and stabilized.20

  - Base Model Selection: Select a foundational LLM. The top recommendation is Mistral’s Mixtral 8x7B or a similar model released under the Apache 2.0 license. This choice is strategic: it offers an excellent balance of high performance and inference efficiency, and its permissive license avoids the commercial restrictions and potential M&A complications associated with the Llama license.32

  - Initial Data Curation: Begin the process of scraping and curating a high-quality dataset of publicly available legal documents. This would include court records, statutes, and regulatory filings from the target jurisdiction (e.g., the Indian Supreme Court archives).36

- Months 4-6 (MVP Development):

  - Initial Fine-Tuning: Utilize the 5x H100 cluster to perform an initial fine-tuning of the selected base model on the curated legal dataset. This will create the first version of the proprietary legal LLM.

  - Build the Minimum Viable Product (MVP): Develop a simple, secure web application. The MVP’s core feature should solve one specific, high-pain problem. A strong candidate is an AI-powered contract analysis tool. The user would upload a contract (e.g., an NDA or a lease agreement), and the MVP would return an automated summary, identify key clauses, and flag potential risks or non-standard terms.41

- Months 7-9 (Pilot Program and Data Flywheel Ignition):

  - Secure Pilot Partners: Identify and partner with one or two innovative, tech-forward boutique law firms. Offer them free access to the MVP for a limited time.

  - Feedback and Iteration: The goal of the pilot is twofold. First, to gather critical user feedback to refine the product and ensure it solves a real-world problem. Second, and more importantly, to gain access to a richer, more diverse set of (fully anonymized and permissioned) legal documents. This is the crucial first turn of the “data flywheel,” where real-world usage data is used to improve the model.

- Months 10-12 (Commercial Launch & Service Expansion):

  - Public Launch: Based on the success of the pilot program, launch the commercial SaaS product. Implement a tiered subscription pricing model (e.g., individual lawyer, small firm, enterprise).

  - Introduce FTaaS: Begin marketing a premium FTaaS offering to larger firms. This service would involve fine-tuning a dedicated version of the model on that specific firm’s internal documents, hosted in a completely isolated, private environment. This caters to the most security-conscious clients and creates a high-margin revenue stream.
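The contract-analysis MVP in the roadmap above takes a contract as input and returns key clauses and flagged risks. The sketch below illustrates that input and output shape with a rule-based stand-in. The clause checklist, risk patterns, and sample NDA are all hypothetical, and in the real product the judgments would come from the fine-tuned legal model rather than regular expressions.

```python
import re

# Hypothetical checklists; placeholders for what the fine-tuned model would infer.
EXPECTED_CLAUSES = {
    "confidentiality": r"\bconfidential",
    "term": r"\bterm\b",
    "governing law": r"\bgoverning law\b",
}
RISK_PATTERNS = {
    "unlimited liability": r"\bunlimited liability\b",
    "perpetual obligation": r"\bin perpetuity\b|\bperpetual\b",
}

def split_clauses(text):
    """Split on numbered headings such as '1.' or '2.' at the start of a line."""
    parts = re.split(r"(?m)^\s*\d+\.\s+", text)
    return [p.strip() for p in parts if p.strip()]

def analyze_contract(text):
    """Return clause count, expected clauses that are missing, and risky clauses."""
    clauses = split_clauses(text)
    lowered = text.lower()
    missing = [name for name, pattern in EXPECTED_CLAUSES.items()
               if not re.search(pattern, lowered)]
    flagged = [(number, risk)
               for number, clause in enumerate(clauses, start=1)
               for risk, pattern in RISK_PATTERNS.items()
               if re.search(pattern, clause.lower())]
    return {"clause_count": len(clauses), "missing": missing, "flagged": flagged}

sample_nda = """1. Confidentiality. Each party shall keep Confidential Information secret in perpetuity.
2. Term. This Agreement lasts two years.
3. Liability. The Recipient accepts unlimited liability for any breach.
"""
report = analyze_contract(sample_nda)
print(report)
```

Keeping the report as a plain dictionary means the rule-based stand-in can later be swapped for a model call without changing the web application that displays the results.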

5.3 Building a Defensible Moat: From Compute to Proprietary Assets

The 5x H100 cluster is a powerful starting advantage, but hardware can always be replicated. The long-term defensibility of the startup—its “moat”—must be built from assets that are much harder to copy.

- The Data Flywheel: This is the most critical component of the long-term strategy. The initial SaaS product and subsequent FTaaS projects are not just revenue sources; they are data acquisition channels. Each new client and each new document processed adds to the startup’s unique, proprietary dataset. This ever-growing dataset is used to continuously re-train and improve the core legal LLM, making it more accurate and capable. A better model attracts more users, who in turn provide more data, creating a virtuous cycle that is extremely difficult for a new competitor to break into.

- The Expertise Moat: Over time, by focusing intensely on a single vertical, the founding team and early employees will become unparalleled experts at the intersection of AI and law. They will understand the specific challenges, workflows, and needs of lawyers better than any generalist AI company. This deep domain expertise becomes a core part of the company’s value proposition and is embedded in the product’s design and functionality.

- The Workflow Integration Moat: As the SaaS platform evolves, it will become more deeply embedded into the daily operations of its client firms. By integrating with case management systems, document repositories, and billing software, the product transitions from a simple “tool” to an indispensable part of the firm’s infrastructure. This creates high switching costs, as leaving the platform would mean disrupting established workflows and losing access to a system that has been trained on the firm’s own history and data.28

5.4 Concluding Remarks: Your Asset is a Key, Not a Castle

The ownership of a 5x NVIDIA H100 GPU cluster provides a startup with a rare and powerful advantage in the current technological landscape. It is a key that can unlock the door to a highly valuable, defensible, and scalable business. However, it is crucial to recognize that the hardware itself is not the final destination. It is the means, not the end.

The long-term, defensible “castle” for this venture will not be built from silicon and servers, but from the intangible yet far more valuable assets that the hardware enables. The castle is the proprietary, fine-tuned legal model that understands the law better than any other. It is the unique, ever-expanding dataset acquired through years of focused operation. It is the deep, hard-won domain expertise that allows the company to anticipate and solve the next generation of problems for its clients.

The primary strategic imperative for the founder is to transfer the value of the initial asset from the physical hardware to this intellectual property as quickly and efficiently as possible. The hardware provides the initial velocity, but the data, models, and expertise will provide the sustainable momentum. By following a focused strategy, targeting a high-value niche like legal technology, and systematically building a proprietary data and expertise moat, a startup can leverage this initial computational advantage into a market-leading enterprise.